Agentic AI is finally out of the lab and onto my desktop. This week felt like a plot twist in two parts, and it completely changed how I’d tell a beginner to jump in without getting burned.

What changed this week and why I care

On Feb 2, 2026, TechCrunch reported that OpenAI launched a new macOS app aimed at agentic coding. That matters. Most of us have been duct-taping agents inside browsers, notebooks, or VS Code. A native app signals OpenAI wants agentic workflows to feel local, fast, and always on. If you build on a Mac, it’s a clean on-ramp.

That same day, eFinancialCareers noted that Brevan Howard’s agentic AI spinout seems to have disappeared. Tough headline. It’s a reminder that hype meets compliance, and sometimes compliance wins. If a top-tier hedge fund can switch lanes, so can your startup pilot.

Put together, it looks like maturity and consolidation in the same breath. Better infrastructure closer to your desktop. Fewer flashy side bets. That’s exactly the kind of window in which I like to enter a new space.

Agentic AI in plain English

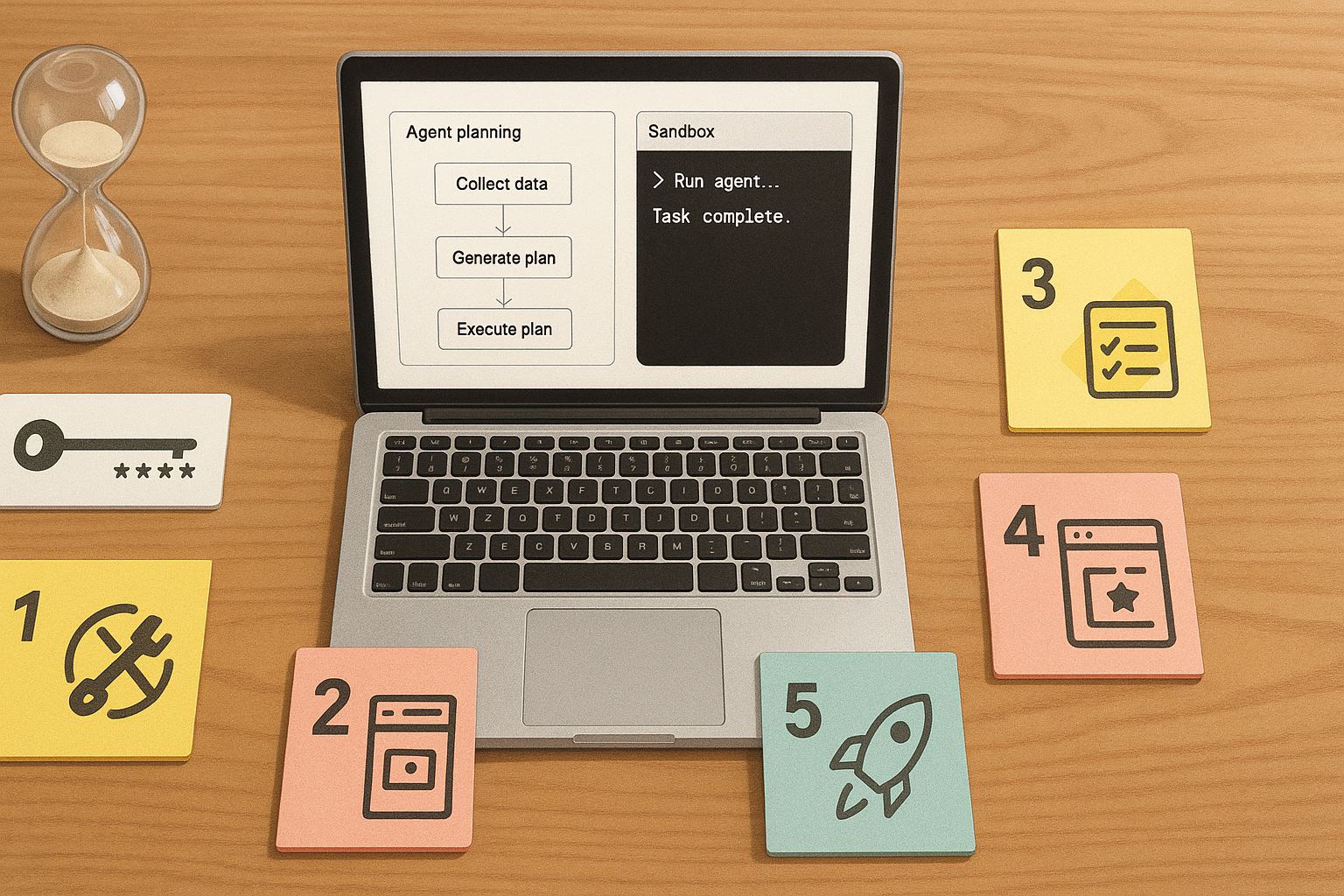

Agentic AI plans and acts. You give it a goal. It breaks the work into steps, picks tools, calls APIs, writes code, checks results, and loops until it’s done. It’s not autocomplete. It’s a junior assistant that actually runs tasks end to end.
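Here is roughly what that loop looks like in code. This is a minimal sketch, not any vendor's API: `TOOLS`, `plan`, and `run_agent` are names I made up for illustration, and the planner is a hard-coded stub standing in for a model call.

```python
from typing import Callable

# Hypothetical tools: plain functions the agent is allowed to call.
TOOLS: dict[str, Callable[[str], str]] = {
    "upper": lambda text: text.upper(),
    "reverse": lambda text: text[::-1],
}


def plan(goal: str) -> list[str]:
    """Stub planner. A real agent would ask a model to produce these steps."""
    return ["upper", "reverse"]


def run_agent(goal: str, max_steps: int = 5) -> str:
    """Plan, then act step by step until the plan ends or the budget runs out."""
    result = goal
    for step_number, tool_name in enumerate(plan(goal), start=1):
        if step_number > max_steps:
            break  # hard stop so a bad plan cannot loop forever
        tool = TOOLS.get(tool_name)
        if tool is None:
            # Check the plan against reality: refuse tools that do not exist.
            raise ValueError(f"plan asked for unknown tool: {tool_name}")
        result = tool(result)  # act, then carry the result into the next step
    return result


print(run_agent("ship it"))  # prints "TI PIHS"
```

The step budget and the unknown-tool check are the two pieces I would keep even in a throwaway prototype, since they cover the loops and tool hallucinations that made early demos brittle.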

Early demos felt magical but brittle. Tool hallucinations, loops, and logs that read like fever dreams. What’s different now is practical agentic AI. Tighter scopes, better guardrails, and real UX so it feels like a helpful app, not a research toy.

Why the OpenAI macOS app matters

I’ve run agents in terminals, Docker, and a graveyard of tabs. It works, but the context switching is brutal. A native macOS app for agentic coding, reported on Feb 2, 2026, checks a few boxes I care about:

- Low friction: one place to think, plan, run, and iterate.

- System-level access: sensible hooks into files, windows, and dev tools so automation is actually useful.

- Consistency: a shared runtime from the model maker means fewer weird edge cases.

I don’t see it replacing your whole stack. I see a launchpad. If you’re new to agentic AI, a sane default on Mac cuts the time from idea to working prototype.

The hedge fund spinout that went quiet

Also on Feb 2, 2026, eFinancialCareers said Brevan Howard’s agentic AI spinout seems to have vanished. I don’t know the backstory. What I take from it is simple: enterprise agents hit real constraints like security, latency, audit, and model drift. If your first agent dies in compliance review, the idea isn’t dead. You just need tighter scope, better logging, and fewer wishful assumptions.

I treat those constraints as a feature. Small, focused agents can create outsized value while the moonshots figure out governance. Start tiny. Prove value. Then scale.

How I’d start with Agentic AI this week

Pick one painful workflow

Agents flop when they try to do everything. Choose a task you repeat: scraping a vendor price list, migrating docs into a knowledge base, summarizing meeting notes into ticket drafts, or checking test coverage and opening issues. If you can outline the steps on paper, an agent can probably do most of it.

Decide the tools upfront

Write down exactly what the agent can touch. For coding: read and write to a local folder, run sandboxed shell commands, and hit one or two APIs with scoped keys. For research: fetch pages, extract structured data, save a CSV. Fewer tools, fewer ways to get stuck.
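One way to make "what the agent can touch" concrete is an allowlist plus a path check. This is a sketch under my own assumptions: the `workspace` folder and the tool names are hypothetical, and `Path.is_relative_to` needs Python 3.9 or newer.

```python
from pathlib import Path

ALLOWED_ROOT = Path("workspace").resolve()  # hypothetical sandbox folder
ALLOWED_TOOLS = {"read_file", "write_file", "run_tests"}  # hypothetical names


def check_tool(name: str) -> None:
    """Reject any tool that was not written down upfront."""
    if name not in ALLOWED_TOOLS:
        raise PermissionError(f"tool not on the allowlist: {name}")


def safe_path(relative: str) -> Path:
    """Resolve a path and refuse anything that lands outside the sandbox."""
    candidate = (ALLOWED_ROOT / relative).resolve()
    if not candidate.is_relative_to(ALLOWED_ROOT):
        raise PermissionError(f"path escapes the sandbox: {relative}")
    return candidate
```

Calling `safe_path("notes/todo.md")` returns a path inside the sandbox, while `safe_path("../secrets.txt")` raises `PermissionError`. It is not a real security boundary on its own, but it catches the most common way an agent wanders off.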

Prototype in a guided UI

On a Mac, try the new OpenAI app to stand up an agentic coding flow quickly. On Windows or Linux, use the environment you trust. The logo doesn’t matter. The feedback loop does. You want to watch the plan, see tool calls, and step in when it drifts.

Log everything, then tighten

Keep a simple log of prompts, plans, tool calls, and outputs. When it works, capture the inputs and outputs. When it fails, capture that too. After a day, patterns pop. Add guardrails. Cap tool calls per run. Require a plan summary before execution. I treat this like unit tests for an intern.
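A log like that can be a single JSON Lines file. The sketch below is one possible shape, not a standard: `RunLog` and its field names are hypothetical. It also enforces the two guardrails mentioned above, a plan summary before execution and a cap on tool calls per run.

```python
import json
import time
from pathlib import Path


class RunLog:
    """Append-only JSONL log with a cap on tool calls per run."""

    def __init__(self, path: Path, max_tool_calls: int = 10) -> None:
        self.path = path
        self.max_tool_calls = max_tool_calls
        self.tool_calls = 0
        self.has_plan = False

    def _write(self, kind: str, payload: dict) -> None:
        # One JSON object per line keeps the file easy to grep and diff.
        entry = {"ts": time.time(), "kind": kind, **payload}
        with self.path.open("a", encoding="utf-8") as handle:
            handle.write(json.dumps(entry) + "\n")

    def record_plan(self, summary: str) -> None:
        self.has_plan = True
        self._write("plan", {"summary": summary})

    def record_tool_call(self, tool: str, args: dict, output: str) -> None:
        if not self.has_plan:
            raise RuntimeError("no plan summary recorded before execution")
        if self.tool_calls >= self.max_tool_calls:
            raise RuntimeError("tool-call cap reached for this run")
        self.tool_calls += 1
        self._write("tool_call", {"tool": tool, "args": args, "output": output})
```

In use, I would create one `RunLog(Path("run.jsonl"))` per run, call `record_plan` once, then `record_tool_call` after each tool returns. Failed runs stay in the file too, which is the point.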

Ship one tiny win

Don’t build a self-driving company agent. Ship something boring that saves 30 minutes a day. That dependable win funds the next two agents. It’s how you avoid ambitious projects that quietly vanish.

Tools I actually reach for

I keep my agentic AI stack boring and replaceable. If you have the new macOS app, use it as a sandbox for agentic coding. If not, any setup that supports planning plus tool use will do. I care about three ingredients:

- A model that can plan steps and call tools, not just chat.

- A simple tool registry with clear input and output schemas.

- Local or project-level storage for files, logs, and artifacts.
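For the second ingredient, a tool registry does not need a framework. Here is a minimal sketch, assuming plain Python types are enough of a schema for a first prototype; `Tool`, `REGISTRY`, `register`, and `call_tool` are hypothetical names, not part of any library.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Tool:
    name: str
    input_schema: dict[str, type]  # argument name -> expected type
    output_type: type
    func: Callable[..., Any]


REGISTRY: dict[str, Tool] = {}


def register(tool: Tool) -> None:
    if tool.name in REGISTRY:
        raise ValueError(f"duplicate tool name: {tool.name}")
    REGISTRY[tool.name] = tool


def call_tool(name: str, **kwargs: Any) -> Any:
    """Validate arguments and the return value against the declared schema."""
    tool = REGISTRY[name]
    if set(kwargs) != set(tool.input_schema):
        raise TypeError(f"{name} expects arguments {sorted(tool.input_schema)}")
    for arg, expected in tool.input_schema.items():
        if not isinstance(kwargs[arg], expected):
            raise TypeError(f"{name}.{arg} must be {expected.__name__}")
    result = tool.func(**kwargs)
    if not isinstance(result, tool.output_type):
        raise TypeError(f"{name} returned {type(result).__name__}")
    return result


register(Tool("word_count", {"text": str}, int, lambda text: len(text.split())))
print(call_tool("word_count", text="log everything then tighten"))  # prints 4
```

Checking both inputs and outputs means a confused model call fails loudly at the tool boundary instead of three steps later.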

The trick isn’t the fanciest graph. It’s giving your agent enough power to be useful and enough structure to be reliable.

What the disappearing spinout taught me

That headline reminded me to make every agent auditable from day one. If compliance knocks, you should be able to show the prompt, the plan, the tools touched, and the artifacts produced. If you can’t, it’s not production-ready. That’s hygiene, not fear.

When I move an agent beyond my laptop, I add default guardrails: role-based access for tools, timeboxed runs, and human-in-the-loop checkpoints for anything touching customer or financial data. Funny enough, those guardrails make agents more predictable and faster to iterate.
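Those three guardrails fit in one wrapper function. This is a sketch with invented names: the roles, the tool names, and `guarded_call` are all hypothetical, and `approve` stands in for whatever human review step you actually use.

```python
import time
from typing import Callable

# Hypothetical role -> allowed tools mapping.
ROLE_TOOLS = {
    "viewer": {"read_report"},
    "operator": {"read_report", "issue_refund"},
}
# Tools that touch customer or financial data need a human sign-off.
NEEDS_APPROVAL = {"issue_refund"}


def guarded_call(
    role: str,
    tool: str,
    action: Callable[[], str],
    deadline: float,
    approve: Callable[[str], bool],
) -> str:
    """Run `action` only if role, time budget, and human approval all allow it."""
    if tool not in ROLE_TOOLS.get(role, set()):
        raise PermissionError(f"role {role!r} may not use {tool!r}")
    if time.monotonic() > deadline:
        raise TimeoutError("run exceeded its time budget")
    if tool in NEEDS_APPROVAL and not approve(tool):
        raise PermissionError(f"human reviewer declined {tool!r}")
    return action()
```

Set `deadline = time.monotonic() + 60` at the start of a run and pass it to every call. Note the limit here is checked before each tool call, so it stops a run between steps but does not interrupt a tool that is already executing.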

What I’m watching next

After the Feb 2, 2026 macOS news, I’m watching three signals. First, more local-first agent capabilities that work offline or with minimal cloud. That would make agents feel like true desktop utilities, not fancy chat windows. Second, clearer patterns for agent logs, retries, and evaluation that beginners can copy. A couple of good templates save weeks. Third, sober case studies from large orgs that show where agentic AI saved time and where it didn’t. Give me a boring productivity chart over a hype reel any day.

If you only take one thing

Agentic AI isn’t a future thing. It’s here, on your desktop, with a native app option on macOS as of Feb 2, 2026. Not every agent venture will make it. That’s normal. Your edge as a beginner is small scope and fast loops. Pick a narrow task, give your agent just a few tools, log everything, and nudge it until it earns its keep.

I’m building in that spirit this month. If you try the new OpenAI app or spin up your first agent, tell me what tripped you up. The rough edges are where the real learning happens, and right now the best practices are being written by the folks who shipped this week.