Agentic AI is moving fast, so I spent today deep-diving into it for you

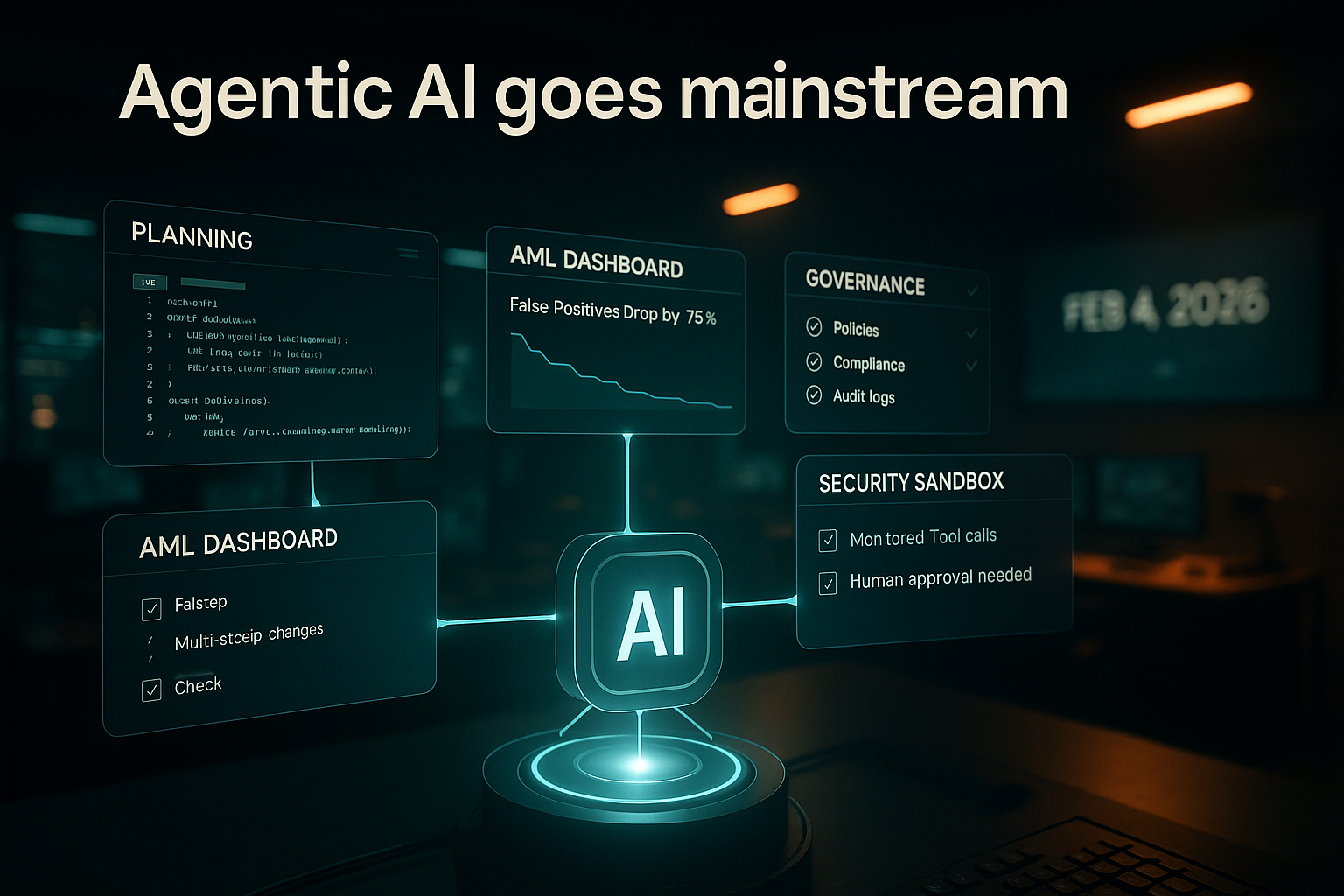

Agentic AI isn’t just autocomplete. It plans, calls tools and APIs, writes files, runs workflows, and checks itself. I’ve been testing agents quietly for months, and Feb 4, 2026 dropped a pile of updates that finally made it feel mainstream.

The quick answer

Agentic AI is crossing the line from demo to daily work. On Feb 4, 2026, Apple’s Xcode 26.3 added native agentic coding, Taktile reported a 75% drop in AML false positives, AI Expo 2026 pushed governance and data readiness, SolarWinds’ CTO outlined secure agent design, and Forbes pressed the accountability question. If you start small and instrument everything, you can get wins this month.

Apple just made agentic coding feel normal

Xcode 26.3 brings native agentic support

On Feb 4, 2026, Apple shipped Xcode 26.3 with integrated agentic coding. Your IDE can now plan multi-step changes, manage refactors, run tests, and report back, not just finish a line of code. InfoWorld’s coverage made it clear this isn’t a toy plugin glued to the side.

Why I care: once agentic behavior lives inside the tool I’m already in all day, adoption stops being a bet and becomes muscle memory. Even if you’re not building for Apple platforms, the signal is obvious. Big platforms are normalizing agents in the dev loop.

Real numbers beat hype

Taktile’s agent cut AML false positives by 75%

Also on Feb 4, 2026, Taktile announced an agentic AML platform and claimed a 75% reduction in false positives. If you’ve worked AML, you know the pain of alarms that never stop. Cutting three out of four of those alerts helps budget, morale, and speed in one swing. Here’s the AML Intelligence write-up.

The pattern is what matters. The agent doesn’t replace investigators. It orchestrates steps, gathers context, ranks risk, and escalates cleanly. If you’re building your first operations or support agent, copy that playbook: tight scope, reversible actions, crisp handoffs.

Governance and data readiness decide who wins

AI Expo 2026 put the focus where it belongs

At AI Expo 2026 Day 1 on Feb 4, the headline wasn’t a shiny model. It was governance and data readiness as the real unlock for agents in the enterprise. That matches what I see in the wild. Agents don’t fail because they can’t call APIs. They fail because permissions are wrong, data is messy, or compliance got ignored. See the AI News recap.

My Monday-morning checklist is painfully simple: map the data, grant least-privilege access with audit logs, define exactly which actions are reversible, and write escalation rules as a checklist the agent can actually follow. Boring wins here.
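
To make the least-privilege and audit-log items concrete, here is a minimal Python sketch. Everything in it is hypothetical and mine, not from any of the sources: the `AccessPolicy` class, the `report-agent` name, and the `sales_db` resource are illustrations only. The idea is simply that every access check, allowed or denied, leaves a log line behind.

```python
import json
import time
from dataclasses import dataclass, field


@dataclass
class AccessPolicy:
    """Hypothetical policy: agent name -> set of allowed (resource, action) pairs."""
    grants: dict[str, set[tuple[str, str]]]
    audit_log: list[str] = field(default_factory=list)

    def check(self, agent: str, resource: str, action: str) -> bool:
        # Anything not explicitly granted is denied.
        allowed = (resource, action) in self.grants.get(agent, set())
        # Record every decision, allowed or denied, as one JSON line.
        self.audit_log.append(json.dumps({
            "ts": time.time(),
            "agent": agent,
            "resource": resource,
            "action": action,
            "allowed": allowed,
        }))
        return allowed


policy = AccessPolicy(grants={"report-agent": {("sales_db", "read")}})
print(policy.check("report-agent", "sales_db", "read"))   # True
print(policy.check("report-agent", "sales_db", "write"))  # False
print(len(policy.audit_log))                              # 2
```

In a real deployment the grants would live in your identity system and the log would go to append-only storage; the in-memory list is only there to keep the sketch runnable.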

Security by design, or don’t ship it

What SolarWinds’ CTO spelled out

Also on Feb 4, 2026, SolarWinds’ CTO broke down how they design secure AI agents: treat agents like services, scope credentials, isolate execution, monitor every tool call, and keep a human in the loop for high-risk actions. The BankInfoSecurity piece matches what I recommend when I review agent plans.

Put the agent in a sandbox. Force authentication to every tool, including internal ones. Log everything. Add rate limits and circuit breakers to actions that change data. Even a brilliant LLM can still make a single disastrous call if you forget a constraint. Guardrails are controls you can point to.
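
For the circuit-breaker part, a rough sketch of what I mean, assuming a simple rule: after a few consecutive failures, stop calling the data-changing action until a cooldown passes. The `CircuitBreaker` class, its thresholds, and the injectable `clock` parameter are my own illustrative choices, not something from the SolarWinds piece. A rate limiter would wrap the same call site in a similar way.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when the breaker refuses a call."""


class CircuitBreaker:
    """Hypothetical breaker: opens after max_failures consecutive errors,
    then rejects calls until cooldown_s seconds have passed."""

    def __init__(self, max_failures=3, cooldown_s=60.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.cooldown_s = cooldown_s
        self.clock = clock  # injectable so the breaker can be tested without waiting
        self.failures = 0
        self.opened_at = None

    def call(self, action, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.cooldown_s:
                raise CircuitOpenError("breaker open; escalate to a human")
            # Cooldown elapsed: close the breaker and start counting again.
            self.opened_at = None
            self.failures = 0
        try:
            result = action(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        # A success resets the consecutive-failure count.
        self.failures = 0
        return result
```

Wrap only the mutating tools, for example `breaker.call(update_record, record_id, payload)` where `update_record` stands in for whatever write function your agent uses. Reads can usually fail without doing damage.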

Accountability isn’t abstract

Forbes asked the uncomfortable question

Also on Feb 4, 2026, Forbes asked who answers when an AI agent gets it wrong. The vendor? The model provider? The team that deployed it? My default is simple: act like the liability is yours until a contract says otherwise. Here’s the Forbes article.

Design for explainability. Make agents write decision logs in plain language, attach the evidence, and produce a human-readable summary for every non-trivial action. When something goes sideways, you’ll want a narrative, not just a stack trace.
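
Here is one way a decision log entry could look, as a small Python sketch. The `DecisionRecord` shape and its field names are assumptions of mine; the point is only that each non-trivial action carries its reason, its evidence, and a summary a human can read without opening a debugger.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """Hypothetical log entry for one non-trivial agent action."""
    action: str
    reason: str
    evidence: list[str] = field(default_factory=list)
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def summary(self) -> str:
        # Plain-language narrative: what happened, why, and what supports it.
        lines = [f"Action: {self.action}", f"Why: {self.reason}"]
        if self.evidence:
            lines.append("Evidence:")
            lines.extend(f"  - {item}" for item in self.evidence)
        else:
            lines.append("Evidence: none attached")
        return "\n".join(lines)


record = DecisionRecord(
    action="Escalated ticket to billing team",
    reason="Refund request exceeds the agent's approval limit",
    evidence=["invoice.pdf", "customer email thread"],
)
print(record.summary())
```

A record with no evidence says so explicitly, which makes gaps easy to spot in review.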

If I were starting this week

Here’s exactly how I’d ramp Agentic AI in under 30 days without boiling the ocean. Keep it small, measurable, and safe, then widen the scope only after the logs look boring.

- Pick one boring, frequent, reversible workflow you own, like weekly reporting or triaging support emails.

- Draw a tight action boundary: what the agent can do, what needs your click, and what is off-limits.

- Instrument everything: save prompts, tool calls, and outcomes, then review twice a week.

- Chase one metric: minutes saved, fewer escalations, or fewer manual edits. Expand only after a clean win.
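
The action boundary and the instrumentation from the list above can be sketched together. This is a minimal illustration for a made-up support-email triage agent; the action names, the `Tier` enum, and the `run_log` list are all hypothetical.

```python
from enum import Enum


class Tier(Enum):
    AUTO = "auto"             # agent may act on its own
    NEEDS_APPROVAL = "ask"    # requires a human click
    FORBIDDEN = "forbidden"   # off-limits


# Hypothetical boundary for a support-email triage agent.
BOUNDARY = {
    "label_email": Tier.AUTO,
    "draft_reply": Tier.AUTO,
    "send_reply": Tier.NEEDS_APPROVAL,
    "issue_refund": Tier.FORBIDDEN,
}

run_log: list[dict] = []


def attempt(action: str, approved_by_human: bool = False) -> bool:
    # Unknown actions default to forbidden so the boundary fails closed.
    tier = BOUNDARY.get(action, Tier.FORBIDDEN)
    allowed = tier is Tier.AUTO or (
        tier is Tier.NEEDS_APPROVAL and approved_by_human
    )
    # Instrument every attempt, including the refused ones.
    run_log.append({"action": action, "tier": tier.value, "allowed": allowed})
    return allowed


print(attempt("label_email"))                          # True
print(attempt("send_reply"))                           # False
print(attempt("send_reply", approved_by_human=True))   # True
print(attempt("issue_refund", approved_by_human=True)) # False
```

The refused attempts are the interesting part of the twice-weekly review: they show where the agent keeps pushing against the boundary.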

Practical tips I actually use

Keep the agent’s world small and explicit

Agents get into trouble when we hand them the keys to a messy kingdom. Give them a short tool list with clear contracts. Start read-only, then unlock writes only when logs prove consistent, correct behavior.

Use checklists, not prose

Agents execute better on natural-language checklists than on vague policy paragraphs. If compliance matters, encode the policy as steps and conditions. Keep the official doc for auditors and feed the agent the checklist version.
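
As a sketch of "policy as steps and conditions", here is a made-up refund policy in Python. The thresholds, field names, and the `Step` class are invented for illustration. Each step has the natural-language line the agent sees plus a condition that can be checked in code, so the same checklist drives both the prompt and the enforcement.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    text: str                      # the natural-language line the agent sees
    check: Callable[[dict], bool]  # condition evaluated against the case data


# Hypothetical refund policy, written as steps rather than a policy paragraph.
REFUND_CHECKLIST = [
    Step("Order is less than 30 days old", lambda c: c["order_age_days"] < 30),
    Step("Refund amount is at most 100", lambda c: c["amount"] <= 100),
    Step("Customer has no open chargeback", lambda c: not c["open_chargeback"]),
]


def first_failed_step(case: dict, checklist: list[Step]):
    """Return the text of the first step that fails, or None if all pass."""
    for step in checklist:
        if not step.check(case):
            return step.text
    return None


def render_for_agent(checklist: list[Step]) -> str:
    """Numbered checklist text suitable for pasting into the agent's instructions."""
    return "\n".join(f"{i}. {s.text}" for i, s in enumerate(checklist, start=1))


case = {"order_age_days": 5, "amount": 500, "open_chargeback": False}
print(render_for_agent(REFUND_CHECKLIST))
print(first_failed_step(case, REFUND_CHECKLIST))  # Refund amount is at most 100
```

The failed step's text doubles as the escalation reason, which keeps the handoff to a human readable.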

Design for graceful failure

Add timeouts, retries with variation, and a final ask-a-human escape hatch. Most real wins come from agents that know their limits, not agents that never fail.
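
A minimal sketch of that failure path in Python. I am reading "retries with variation" as exponential backoff with random jitter, which is my assumption, and I assume the wrapped action enforces its own timeout and raises when it expires. The function name `run_with_fallback` and the `"needs_human"` status are hypothetical.

```python
import random
import time


def run_with_fallback(action, max_attempts=3, base_delay_s=0.5, sleep=time.sleep):
    """Try action() up to max_attempts times with jittered backoff.

    Returns ("ok", result) on success or ("needs_human", last_error)
    once the attempts are used up.
    """
    last_error = None
    for attempt in range(max_attempts):
        try:
            return ("ok", action())
        except Exception as exc:
            last_error = exc
            if attempt < max_attempts - 1:
                # Exponential backoff plus random jitter so retries vary in timing.
                delay = base_delay_s * (2 ** attempt) + random.uniform(0, base_delay_s)
                sleep(delay)
    # Escape hatch: hand the problem to a person instead of looping forever.
    return ("needs_human", last_error)
```

The caller decides what `"needs_human"` means in practice, for example opening a ticket with the last error attached.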

FAQ: the questions I keep getting

What is Agentic AI in plain English?

It’s AI that takes actions, not just answers prompts. It plans steps, calls tools and APIs, writes or edits files, and checks its own work. Think of it like a junior ops teammate that follows a playbook inside your environment.

How do I keep Agentic AI safe in production?

Treat agents like services. Scope credentials, isolate execution, log every tool call, and put human approval on high-risk actions. Add rate limits and circuit breakers for anything that mutates data so you can recover fast.

What’s the fastest path to ROI?

Start with one repetitive, reversible workflow you personally own. Instrument everything, chase a single metric, and iterate weekly. Once you get a small win, widen the boundary or add one more tool. That compounding loop sticks.

Who’s liable when an agent makes a mistake?

Assume you are until a contract says otherwise. Build explainability in from day one with decision logs, attached evidence, and human-readable summaries. It speeds audits, incident response, and trust with stakeholders.

Where I’m leaning after today

I’m doubling down on two tracks. First, I’m using agentic coding inside the tools where I already live so I can harvest tiny daily wins without context switching. Second, I’m building narrow, high-signal agents around a single business outcome with explicit playbooks and logs. Feb 4, 2026 confirmed the direction: platform support is here, measurable use cases are real, governance separates winners, security has a playbook, and accountability is now a headline. If you’ve been waiting for a sign to move from prompts to agents, this is it.