Agentic AI just crossed a few real thresholds, and I felt it in my roadmap. I spent the last two days digging into fresh updates and actually changed how I build and ship agents because of them.

Quick answer

Agentic AI is moving from chatty assistants to systems that plan, act, verify, and try again. In Feb 2026 we got real-world proof: self-correcting 3D printing, banks letting agents touch compliance, the first governance framework just for agents, big infra warnings, and credible legal workflows. The winning pattern is boring on purpose: tight loops, strict permissions, fast feedback.

3D printers that fix themselves mid-print

On Feb 7, 2026, researchers showed an agentic AI system that corrects 3D prints in real time by watching a camera feed, comparing it against the model, and adjusting speeds or temperatures on the fly. No more coming back to a spaghetti mess. I first saw it covered by Manufactur3D.

Why this matters

This is the agent loop in the physical world: observe, plan, act, verify, repeat. The power isn’t the model’s IQ, it’s the closed loop with sensors and fast feedback. If you’re new to agents, copy that pattern everywhere you can.
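
Here's a minimal sketch of that loop in Python. Everything named here is my own stand-in: `FakeHotend` simulates a sensor and actuator, and the "close half the gap" planner is just an illustration, not how the researchers' system works.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class LoopResult:
    succeeded: bool
    actions: int      # how many corrective actions were taken
    state: float      # last observed reading


def run_loop(
    observe: Callable[[], float],
    plan: Callable[[float, float], float],
    act: Callable[[float], None],
    target: float,
    tolerance: float = 0.5,
    max_actions: int = 10,
) -> LoopResult:
    """Observe, verify, plan, act, and repeat until on target or out of tries."""
    actions = 0
    while True:
        reading = observe()                        # observe
        if abs(reading - target) <= tolerance:     # verify
            return LoopResult(True, actions, reading)
        if actions >= max_actions:                 # stop instead of looping forever
            return LoopResult(False, actions, reading)
        act(plan(reading, target))                 # plan, then act
        actions += 1


class FakeHotend:
    """Hypothetical stand-in for a real sensor and actuator."""

    def __init__(self, temperature: float) -> None:
        self.temperature = temperature

    def read(self) -> float:
        return self.temperature

    def nudge(self, delta: float) -> None:
        self.temperature += delta


hotend = FakeHotend(190.0)
result = run_loop(
    observe=hotend.read,
    plan=lambda reading, target: (target - reading) * 0.5,  # close half the gap
    act=hotend.nudge,
    target=210.0,
)
print(result)
```

The cap on actions matters as much as the loop itself: an agent that can't hit the target should stop and report, not keep trying.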

Try this

Build a micro-agent that fixes one thing only. In OctoPrint, watch first-layer unevenness, then auto-adjust bed mesh or pause for a quick nozzle clean. The goal isn’t perfection. It’s building the loop and feeling the magic of fast retries.

Banks are letting agents touch compliance

On Feb 6, 2026, reports surfaced that Goldman Sachs is letting AI agents handle accounting and compliance tasks like reconciliation, policy checks, and audit-ready logs. This is not a demo. It’s one of the most conservative corners of finance giving agents real work. Read the coverage on PYMNTS.

Why this matters

If agents can survive compliance, they can survive your startup. It’s less about clever reasoning and more about guardrails: role-based access, immutable logs, and crisp human handoffs on edge cases.

Try this

Take a small expense dataset and build a categorization agent with three hard rules: cite the rule used, add a one-line justification, and route anything unclear to a needs-review folder. You’re practicing transparency, justification, and escalation.
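
A rough sketch of those three rules, assuming simple keyword matching. The rule IDs, keywords, and categories in `RULES` are invented for illustration; swap in your own policy.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical policy: (rule id, keyword, category).
RULES = [
    ("R1", "uber", "Travel"),
    ("R2", "aws", "Cloud"),
    ("R3", "lunch", "Meals"),
]


@dataclass
class Decision:
    description: str
    category: str              # "needs-review" when no single rule applies
    rule_id: Optional[str]     # the rule cited, if any
    justification: str         # one-line reason


def categorize(description: str) -> Decision:
    words = set(re.findall(r"[a-z0-9]+", description.lower()))
    matches = [rule for rule in RULES if rule[1] in words]
    if len(matches) == 1:
        rule_id, keyword, category = matches[0]
        reason = f"Matched keyword '{keyword}' under rule {rule_id}."
        return Decision(description, category, rule_id, reason)
    # Zero or several matches means the agent is unsure, so it escalates.
    reason = "No rule matched." if not matches else "Multiple rules matched."
    return Decision(description, "needs-review", None, reason)


for line in ["Uber to airport", "Team lunch via Uber Eats", "Mystery charge"]:
    print(categorize(line))
```

Treating "more than one rule matched" as unclear is a deliberate choice here: I'd rather over-escalate early and loosen it later.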

Singapore’s agentic AI governance framework

Also on Feb 6, 2026, Singapore unveiled what it calls the first governance framework focused specifically on agentic AI. Most policies lump agents into generic AI rules. This one talks directly about autonomy, oversight, and kill switches. The announcement was covered by CDO Magazine.

Why this matters

Governance is just deciding how much freedom your agent gets and how you’ll reel it back. Think explicit tool permissions, clear audit logs, and visible off switches. If you bake this in early, experiments scale instead of stall.
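
The off switch can be very plain. This sketch assumes a convention I made up: if a file named `STOP` exists, the agent halts before its next step.

```python
from pathlib import Path
from typing import Callable, List

STOP_FILE = Path("STOP")  # hypothetical convention: create this file to halt the agent


def run_steps(steps: List[Callable[[], str]], stop_file: Path = STOP_FILE) -> List[str]:
    """Run steps in order, checking the off switch before each one."""
    completed = []
    for step in steps:
        if stop_file.exists():
            break  # someone flipped the switch; finish nothing further
        completed.append(step())
    return completed
```

Anyone on the team can flip it with `touch STOP`, which is what makes it visible.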

Try this

Whenever you give a tool to an agent, write the permission next to it in plain language. Example: this agent can send emails only to addresses on this whitelist and must include a [DRAFT] tag. Log every tool call to a simple CSV. That CSV becomes your first audit trail.
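
Here's one way that email example could look, as a sketch. The addresses, the `tool_calls.csv` filename, and the function names are my assumptions, and the function only returns the message it would send; real delivery is out of scope.

```python
import csv
from datetime import datetime, timezone
from pathlib import Path
from typing import Optional

# Permission, in plain language: this agent may send email only to addresses
# in ALLOWED_RECIPIENTS, and every subject must carry a [DRAFT] tag.
ALLOWED_RECIPIENTS = {"ops@example.com", "me@example.com"}  # hypothetical whitelist
LOG_PATH = Path("tool_calls.csv")


def log_tool_call(tool: str, argument: str, outcome: str, log_path: Path = LOG_PATH) -> None:
    """Append one row per tool call; write the header if the file is new."""
    is_new = not log_path.exists()
    with log_path.open("a", newline="") as f:
        writer = csv.writer(f)
        if is_new:
            writer.writerow(["timestamp", "tool", "argument", "outcome"])
        writer.writerow([datetime.now(timezone.utc).isoformat(), tool, argument, outcome])


def send_email(to: str, subject: str, body: str, log_path: Path = LOG_PATH) -> Optional[dict]:
    """Return the message that would be sent, or None if the permission blocks it."""
    if to not in ALLOWED_RECIPIENTS:
        log_tool_call("send_email", to, "blocked: recipient not on whitelist", log_path)
        return None
    if not subject.startswith("[DRAFT]"):
        subject = f"[DRAFT] {subject}"
    log_tool_call("send_email", to, "allowed", log_path)
    return {"to": to, "subject": subject, "body": body}
```

Blocked calls get logged too. The refusals are often the most useful rows in the audit trail.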

Your infrastructure might be the bottleneck, not the model

On Feb 6, 2026, Cisco flagged infrastructure as a drag on agentic AI. I feel this in my bones. A brilliant agent on paper can still feel dumb if every tool call waits on a slow API, network jitter, or a busy GPU.

Why this matters

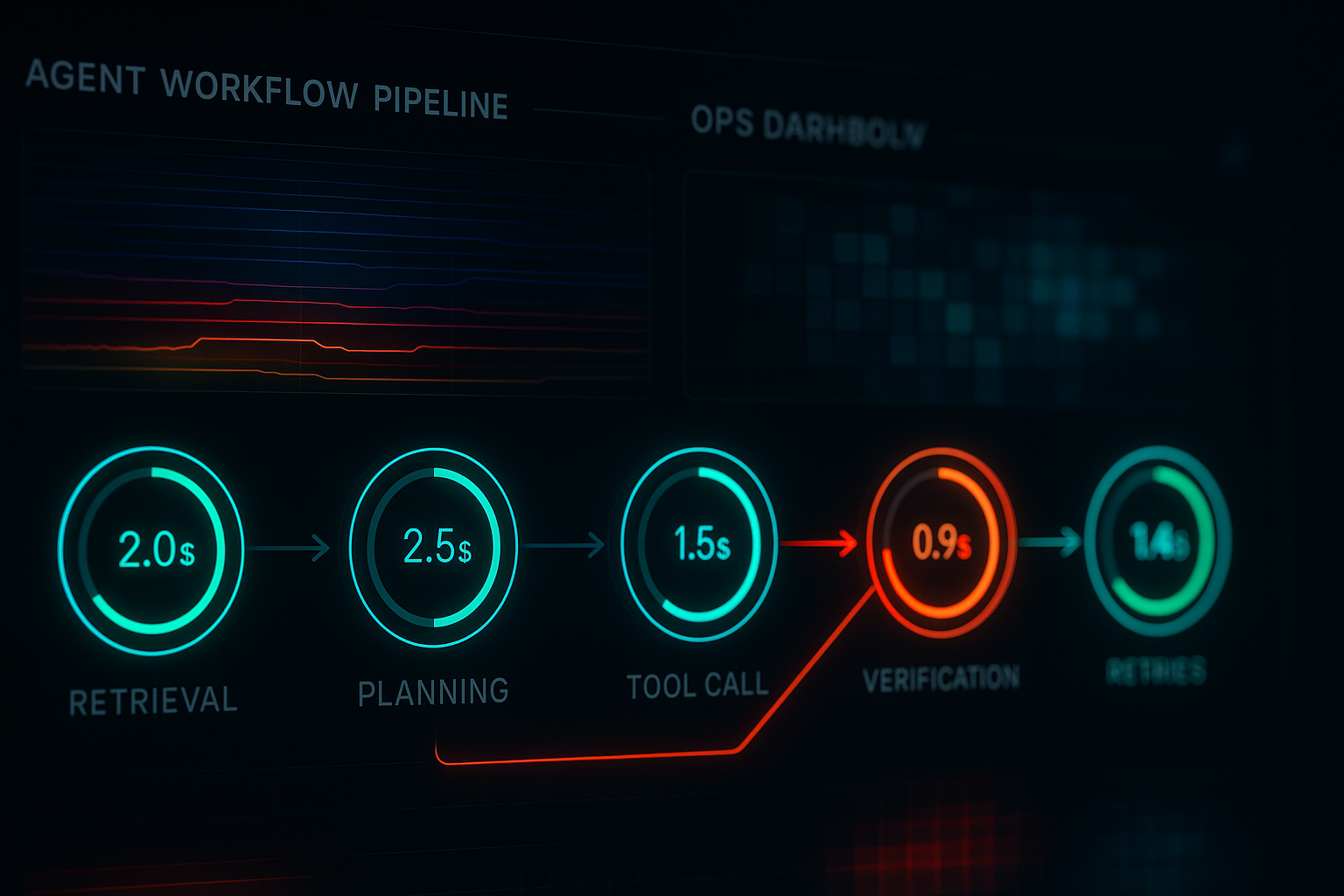

Agent performance is often an ops problem dressed as an AI problem. Latency compounds across planning, retrieval, verification, and retries. If you don’t budget for this, agents time out or start taking riskier shortcuts.

Try this

Give your agent a latency budget: 2 seconds for retrieval, 3 seconds for a tool call, 1 second for verification. If a step exceeds budget, degrade gracefully, skip optional checks, or use a cached result. It’s shocking how much snappier agents feel with a simple budget.
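
A small sketch of that budget using Python's standard thread pool. The step names and the `run_with_budget` helper are mine, and the fallback is whatever cached or default value you choose to pass in.

```python
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as FutureTimeout
from typing import Any, Callable, Dict, Tuple

# Per-step budgets in seconds, matching the numbers above.
BUDGET_SECONDS = {"retrieval": 2.0, "tool_call": 3.0, "verification": 1.0}

_pool = ThreadPoolExecutor(max_workers=4)


def run_with_budget(
    step: str,
    func: Callable[[], Any],
    fallback: Any,
    budgets: Dict[str, float] = BUDGET_SECONDS,
) -> Tuple[Any, bool]:
    """Run func within the step's budget.

    Returns (value, over_budget). On overrun the fallback is returned instead.
    """
    future = _pool.submit(func)
    try:
        return future.result(timeout=budgets[step]), False
    except FutureTimeout:
        # Best effort only: a thread that is already running is not interrupted,
        # so the slow call finishes in the background and its result is dropped.
        future.cancel()
        return fallback, True


docs, over = run_with_budget("retrieval", lambda: ["fresh doc"], fallback=["cached doc"])
print(docs, over)
```

The `over_budget` flag is worth logging. A step that keeps blowing its budget is telling you where the ops work is.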

Can agents handle junior legal work

On Feb 6, 2026, TechCrunch asked whether agents might be lawyers after all. I don’t see attorneys getting replaced, but research, citation finding, clause comparison, deadline tracking, form filling, and supervised first drafts are squarely in agent territory.

Why this matters

Legal is a high-stakes sandbox for good agent design: retrieval that cites sources, strict tool permissions, and clear escalation. If you can make an agent safe here, you can likely port the same design to HR, procurement, security reviews, and any policy-heavy workflow.

Try this

Build a contract review helper that does three things: flags up to five clauses that deviate most from your template, shows the original text with a one-sentence risk note, and labels risk as low, medium, or escalate. No auto-edits. No emails. Keep it narrow and supervised.
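
As a starting point, here's a sketch that compares clauses by text similarity with Python's `difflib`. The thresholds in `risk_label` are guesses of mine, and the note only describes how far the wording drifted. A real risk note would come from a model or a reviewer.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from typing import Dict, List


@dataclass
class Flag:
    clause: str
    original_text: str   # the contract's wording, shown as-is
    similarity: float    # 1.0 means identical to the template
    risk: str            # "low", "medium", or "escalate"
    note: str


def risk_label(similarity: float) -> str:
    # Hypothetical thresholds; tune them against contracts you already reviewed.
    if similarity >= 0.9:
        return "low"
    if similarity >= 0.6:
        return "medium"
    return "escalate"


def review(template: Dict[str, str], contract: Dict[str, str], max_flags: int = 5) -> List[Flag]:
    """Flag the clauses that deviate most from the template. Read-only: no edits."""
    flags = []
    for name, expected in template.items():
        actual = contract.get(name, "")
        similarity = SequenceMatcher(None, expected, actual).ratio()
        if similarity < 1.0:
            if actual:
                note = f"Wording is {similarity:.0%} similar to the template."
            else:
                note = "Clause is missing from the contract."
            flags.append(Flag(name, actual, similarity, risk_label(similarity), note))
    flags.sort(key=lambda flag: flag.similarity)  # biggest deviations first
    return flags[:max_flags]
```

Similarity is a crude proxy. Changing "30" to "90" barely moves the score but matters a lot, which is exactly why this stays supervised.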

The pattern I keep seeing

The winners aren’t using agents as magic brains. They’re building tight loops, simple permissions, and fast feedback. The tech can be fancy, but the structure is intentionally boring.

- Define the target state in one sentence and how the agent knows it got there

- Grant the smallest tool permissions that still let it succeed, and log every call

- Draw the escalation path and make it obvious when the agent should stop

That tiny checklist turns wild demos into dependable helpers. It scales from a weekend script to an enterprise rollout.

What I’m changing in my own builds

I’m tightening feedback loops and making audit logs first-class. Seeing a bank trust agents with compliance pushed me to prioritize transparency. I’m also budgeting latency per step, because a fast, slightly dumber agent usually beats a smart one that feels sluggish.

Singapore’s move nudged me to write permissions right next to the tool in plain language. It feels like a mini contract. When I bend a rule, it’s visible and a little uncomfortable in the best way.

If you’re just getting started

Pick one domain where errors are safe and feedback is quick. Good candidates are scheduling, expense triage, pulling status from tickets, and proofreading customer replies. Give your agent one tool, one verification step, and one escalation rule. Then iterate. The intimidation fades the moment an agent quietly handles a tiny job better than you do.

If you’re worried about regulation, good. Borrow Singapore’s spirit: explicit autonomy, observable behavior, reversible decisions. If you can explain in one sentence what your agent is allowed to do, you’re on the right track.

What I’m watching next

I want to see more physical-world agents like the 3D printer work, plugged into wider production lines. I’m also watching how banks document agent decisions for auditors, because those patterns will translate into healthcare, insurance, and the public sector. And selfishly, I want better local-first tooling so my agents don’t stall on network hiccups.

FAQ

What is agentic AI

Agentic AI is software that can plan, take actions with tools or APIs, check its own work, and try again. Think of it as less chat, more doing. The loop is observe, plan, act, verify, repeat.

Is agentic AI safe for beginners

Yes, if you use guardrails. Start with the smallest useful tool permission, log every action, and set a clear escalation rule. Keep your first agents narrow and supervised.

How do I add governance without slowing down

Write permissions in plain language next to each tool, log calls to a simple CSV, and define a visible off switch. This adds minutes to setup and saves hours of debugging and compliance later.

How do I manage latency in agent workflows

Create a per-step latency budget and decide how to degrade when a step runs long. Cache results, skip optional checks, or switch to a lighter model. Your agent will feel faster and fail more gracefully.

Can agentic AI replace lawyers or compliance teams

No, but it can take on structured, supervised tasks. Research, clause diffs, deadline tracking, and form filling are realistic today. Humans still make the final calls, especially in high-stakes scenarios.