Agentic AI finally clicked for me this morning. It stopped feeling like a flashy demo and started looking like the new default for real work.

Quick answer: On Feb 9, 2026, five signals landed across retail, healthcare, data infrastructure, governance, and cloud tooling that point to agentic AI going mainstream. If you want something working this week, give your agent a tight job description, add a simple memory layer, cap spend, and ship a tiny workflow you can review and improve daily.

Quick refresher: what is agentic AI?

Think of an AI that pursues a goal rather than just answering a question. It plans steps, uses tools and APIs, calls services, checks its work, and asks for approval when needed. Instead of “write me an email,” it is “compare three vendors, pick the best under my budget, then draft and schedule the outreach with citations.”
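To make that loop concrete, here is a minimal Python sketch under loud assumptions: the planner is a hard-coded list rather than a model, the tools in `TOOLS` are hypothetical stand-ins that only return strings, and `approve` is whatever callback you use to ask a human. It shows the shape (plan, act with tools, check, ask before anything irreversible), not a production agent.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    tool: str                   # key into the hypothetical TOOLS registry
    arg: str                    # one string argument keeps the sketch small
    irreversible: bool = False  # irreversible steps wait for human approval


# Hypothetical tools; real ones would call search APIs, email services, etc.
TOOLS: dict[str, Callable[[str], str]] = {
    "search": lambda query: f"results for {query}",
    "draft": lambda topic: f"draft about {topic}",
    "schedule": lambda item: f"scheduled {item}",
}


def plan(goal: str) -> list[Step]:
    # Stand-in planner: a real agent would ask a model to produce these steps.
    return [
        Step("search", goal),
        Step("draft", goal),
        Step("schedule", "outreach", irreversible=True),
    ]


def check(output: str) -> bool:
    # Stand-in self-check: here it only rejects empty output.
    return bool(output.strip())


def run_agent(goal: str, approve: Callable[[Step], bool]) -> list[str]:
    """Plan, act with tools, check each result, and ask before irreversible steps."""
    log: list[str] = []
    for step in plan(goal):
        if step.irreversible and not approve(step):
            log.append(f"skipped {step.tool}: not approved")
            continue
        output = TOOLS[step.tool](step.arg)
        if not check(output):
            log.append(f"stopped at {step.tool}: check failed")
            break
        log.append(output)
    return log
```

Calling `run_agent("compare three vendors", approve=lambda step: False)` runs the search and draft steps, then logs that scheduling was skipped because nobody approved it.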

Signal 1: Retail just opened the front door to agents

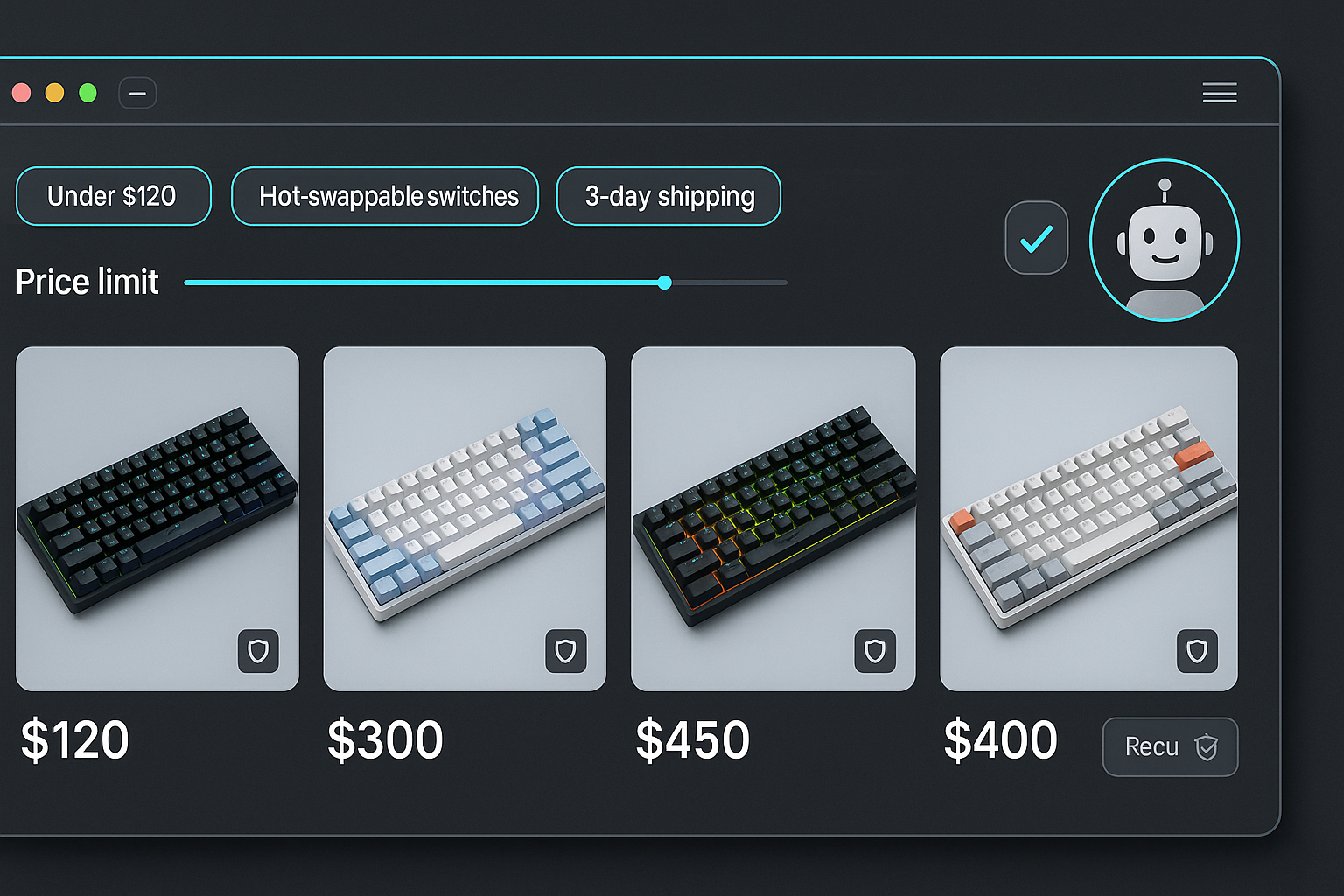

On Feb 9, 2026, Microsoft published a piece on how agentic commerce is becoming the new front door to retail. Translation in my head: the shopping journey starts with your agent, not a search bar. Discovery, comparison, and even checkout can run inside the constraints you set.

This matters because retail kills bad ideas fast. If big players reframe the entry point to buying as an agent, the experience bar for everyone moves up. I’d practice on a tiny “buyer’s assistant” that tracks specs, price ceilings, return policies, and only pings me when a match appears. Agents shine when constraints are set upfront.

What I’d try

I’d start with one narrow goal like “find me a mechanical keyboard under 120 dollars with hot-swappable switches that ships in 3 days.” Define the steps, the sites it is allowed to visit, and exactly when it must ask for approval. It feels very different from basic chat.
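Writing the constraints down as data is what makes them enforceable. A minimal sketch of the keyboard goal, assuming listings have already been fetched into a hypothetical `Listing` shape and that `shop-a.example` and `shop-b.example` are placeholder allowed sites:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Listing:
    site: str
    name: str
    price_usd: float
    hot_swappable: bool
    ship_days: int


@dataclass(frozen=True)
class BuyerConstraints:
    price_under_usd: float = 120.0  # "under 120 dollars" is a strict ceiling
    require_hot_swap: bool = True
    max_ship_days: int = 3
    # Hypothetical allow-list; the agent may not look anywhere else.
    allowed_sites: frozenset[str] = frozenset({"shop-a.example", "shop-b.example"})

    def matches(self, item: Listing) -> bool:
        return (
            item.site in self.allowed_sites
            and item.price_usd < self.price_under_usd
            and (item.hot_swappable or not self.require_hot_swap)
            and item.ship_days <= self.max_ship_days
        )


def matches_to_report(listings: list[Listing], rules: BuyerConstraints) -> list[Listing]:
    # The agent only pings me when this list is non-empty; buying still needs my approval.
    return [item for item in listings if rules.matches(item)]
```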

Signal 2: Healthcare is putting agents to work

Also on Feb 9, 2026, Medable announced an AI agent for clinical trials. Healthcare is not casual about new tech, which tells me two things: the productivity upside is real, and the oversight is tight. Trials are full of protocols, eligibility checks, documents, and compliance reviews. If an agent can safely support that, it can probably handle your onboarding flow, vendor evaluation checklist, or data QA with the right guardrails. That is why I add guardrails before I add scope.

Signal 3: 7 billion dollars says memory and orchestration matter

Later that day, PYMNTS reported Databricks raised 7 billion dollars for an “AI agent database” focused on memory and orchestration. Long-running agents need durable state, secure tool access, and an explainable history of decisions. That is as much a data problem as a model problem. Link if you want to dive deeper: Databricks’ AI agent database.

What this unlocks

Agents that remember why they chose path A over B, pick up where they left off, compare today’s plan to last week’s results, and justify changes. That is where a toy turns into a teammate.
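One cheap way to get the "compare today's plan to last week's and justify changes" behavior is to diff the two plans and refuse to proceed while any change lacks a reason. A minimal sketch, assuming plans are lists of step descriptions; the function names are hypothetical, and the diff ignores reordering:

```python
def plan_changes(previous: list[str], current: list[str]) -> dict[str, list[str]]:
    """Diff two plans (lists of step descriptions) into added and dropped steps."""
    prev, curr = set(previous), set(current)
    return {"added": sorted(curr - prev), "dropped": sorted(prev - curr)}


def unjustified(changes: dict[str, list[str]], reasons: dict[str, str]) -> list[str]:
    """Return changed steps that have no one-line reason attached."""
    return [
        step
        for kind in ("added", "dropped")
        for step in changes[kind]
        if not reasons.get(step, "").strip()
    ]
```

If `unjustified(...)` returns anything, the agent goes back and explains itself before running the new plan.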

Signal 4: Manage agents like people, not pets

Also on Feb 9, 2026, PYMNTS highlighted OpenAI’s view that agents should be managed like human workers. I love this framing. It pushes me to write a job description with role, scope, permissions, budget, outputs, and approval points, so there are no surprises later. Not “do marketing” but “draft three email variations from this brief, spend under five dollars on API calls, save to this folder, then notify me.”
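A job description is more useful when the agent's runtime can actually check it. Here is a minimal sketch of the email-drafting example as a data structure with a hard spend cap; the field names, tool names, and `drafts/` folder are hypothetical, and per-call costs are assumed to be known before each call.

```python
from dataclasses import dataclass


class BudgetExceeded(Exception):
    """Raised when a charge would push spend past the hard cap."""


@dataclass
class JobDescription:
    role: str
    scope: str
    permissions: frozenset[str]      # tools the agent may call
    budget_usd: float                # hard cap on API spend for this job
    output_folder: str               # where results go (hypothetical path)
    approval_points: frozenset[str]  # actions that wait for a human
    spent_usd: float = 0.0

    def charge(self, cost_usd: float) -> None:
        # Refuse the call before spending, rather than noticing afterwards.
        if self.spent_usd + cost_usd > self.budget_usd:
            raise BudgetExceeded(
                f"{self.role}: {self.spent_usd + cost_usd:.2f} would exceed {self.budget_usd:.2f}"
            )
        self.spent_usd += cost_usd

    def may_use(self, tool: str) -> bool:
        return tool in self.permissions

    def needs_approval(self, action: str) -> bool:
        return action in self.approval_points
```

For the example above you would construct it with `budget_usd=5.0`, permissions like `{"read_brief", "write_drafts"}`, and `"send_email"` as an approval point, then call `charge` before every model call.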

Signal 5: AWS shrank the time from idea to demo

Amazon Web Services dropped a full-stack starter template for Bedrock AgentCore on Feb 9, 2026. I am not pushing a specific cloud, but a prebuilt planner, tools, and memory layer means I can skip the yak shaving and jump to behavior. Here’s the announcement: AWS Bedrock AgentCore starter. Templates make the moving parts obvious: planner vs executor, tool calls, memory, and guardrails. Once that clicks, swap in your favorite stack.
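To show what "planner vs executor" means independent of any cloud, here is a minimal Python sketch. It is not the AgentCore API or the template's code; `Planner`, `Executor`, `ToolCall`, and `run_loop` are hypothetical names. The planner decides what to do, the executor decides how to do it safely, and memory is the list of results fed back each turn.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ToolCall:
    tool: str
    args: dict[str, str] = field(default_factory=dict)


class Planner:
    """Decides what to do next. A fixed rule here; a model call in practice."""

    def next_calls(self, goal: str, memory: list[str]) -> list[ToolCall]:
        if not memory:
            return [ToolCall("lookup", {"query": goal})]
        return []  # one result is enough for this toy goal


class Executor:
    """Decides how to do it: only allow-listed tools, nothing else."""

    def __init__(self, tools: dict[str, Callable[..., str]], allowed: set[str]):
        self.tools = tools
        self.allowed = allowed

    def execute(self, call: ToolCall) -> str:
        if call.tool not in self.allowed:  # the guardrail lives in the executor
            raise PermissionError(f"tool not allowed: {call.tool}")
        return self.tools[call.tool](**call.args)


def run_loop(goal: str, planner: Planner, executor: Executor, max_turns: int = 5) -> list[str]:
    memory: list[str] = []  # results fed back to the planner each turn
    for _ in range(max_turns):
        calls = planner.next_calls(goal, memory)
        if not calls:
            break
        for call in calls:
            memory.append(executor.execute(call))
    return memory
```

Keeping permissions in the executor means a confused planner can ask for anything, but only allow-listed tools ever run, and `max_turns` bounds a planner that never says it is done.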

So what do you actually do this week?

Here is exactly how I would get a real agent live by the weekend.

- Pick one annoying, repeatable task with hard constraints. Vendor comparisons, newsletter curation, or a weekly expense summary all work.

- Write a tiny job description: goal, allowed tools and sites, budget cap, output format, and when to ask for approval.

- Add a simple memory file or table. Log the last five runs, the choices made, and one sentence on why.

- Stand up a starter project. Use the AWS template above if that is your lane or any stack that can call APIs, log steps, and render a basic UI.

- Run it like a manager. Review logs, approve critical steps, and tighten permissions until it is boring. Boring is good.
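The memory step from the list can be as small as a JSON file. A minimal sketch, assuming one record per run with the choice made and a one-sentence reason; the file name and `log_run` helper are hypothetical:

```python
import json
from pathlib import Path

MAX_RUNS = 5  # keep only the last five runs


def log_run(path: Path, choice: str, why: str) -> list[dict[str, str]]:
    """Append one run to a JSON memory file and keep only the newest MAX_RUNS."""
    runs = json.loads(path.read_text()) if path.exists() else []
    runs.append({"choice": choice, "why": why})
    runs = runs[-MAX_RUNS:]
    path.write_text(json.dumps(runs, indent=2))
    return runs
```

Feeding the returned list into the next run's prompt is often enough for the agent to notice it keeps picking the same option, and the file doubles as the log you review.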

The pattern I am seeing

All five signals landed on Feb 9, 2026, and they rhyme. Retail is normalizing agents as the start of the customer journey. Healthcare is proving agents can survive compliance. Infrastructure money is flowing into memory and orchestration. The biggest model players are treating agents like employees with policies. And cloud tooling is flattening the setup curve.

If this were one headline, I would shrug. Together it feels like a phase change. The question is shifting from “Can agents do anything useful?” to “How do we deploy them responsibly, measure them, and scale them?” That is a great moment to start because the guardrails are finally catching up to the hype.

FAQ

How do I keep an agent from going rogue?

Scope and money. Give read-only access to sensitive systems, cap spend, and require approval for anything irreversible. If you would not hand a new intern the keys to production, do not hand them to an agent either.
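The scope half of that answer fits in one gate function that every tool call passes through. A minimal sketch, assuming each action is labeled with a target system and an access level; the `Access` levels and the `crm`/`billing` entries in `READ_ONLY_SYSTEMS` are hypothetical examples:

```python
from enum import Enum


class Access(Enum):
    READ = "read"
    WRITE = "write"
    IRREVERSIBLE = "irreversible"  # deletes, payments, sent emails


# Hypothetical policy: sensitive systems are read-only for the agent.
READ_ONLY_SYSTEMS = {"crm", "billing"}


def gate(system: str, access: Access, approved_by_human: bool = False) -> bool:
    """Return True if the agent may perform this action."""
    if system in READ_ONLY_SYSTEMS and access is not Access.READ:
        return False  # no writes to sensitive systems, approval or not
    if access is Access.IRREVERSIBLE:
        return approved_by_human  # anything irreversible needs a human yes
    return True
```

The money half is a hard spend cap checked before each call, the same idea as a budget field in the agent's job description.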

How do I reduce hallucinations in agentic AI?

Expect them and design around them. Use retrieval for facts, add self-check steps, force citations, and store explanations alongside outputs. When an agent can show its sources and reasoning, you can spot errors fast.
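"Force citations" can be a mechanical check rather than a hope. A minimal sketch, assuming the agent is told to cite retrieved sources as `[1]`, `[2]`, and so on; the sentence splitter is deliberately naive, and `uncited_sentences` is a hypothetical helper:

```python
import re

CITATION = re.compile(r"\[(\d+)\]")          # citations look like [1], [2], ...
SENTENCE_END = re.compile(r"(?<=[.!?])\s+")  # naive sentence splitter


def uncited_sentences(answer: str, sources: list[str]) -> list[str]:
    """Return sentences with no citation, or with a citation to a missing source."""
    flagged = []
    for sentence in SENTENCE_END.split(answer.strip()):
        if not sentence:
            continue
        refs = [int(n) for n in CITATION.findall(sentence)]
        if not refs or any(not 1 <= n <= len(sources) for n in refs):
            flagged.append(sentence)
    return flagged
```

This only checks that claims point at real sources, not that the sources say what the agent claims. It narrows what a human has to verify rather than replacing the review.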

What should I use for agent memory?

Start simple. A local database table or a lightweight vector store that tracks tasks, outcomes, and lessons learned is enough. Once you feel the benefit, grow into a more stateful stack.
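Here is what "a local database table" can look like with Python's built-in `sqlite3`. The `runs` schema and helper names are hypothetical; the point is one row per task attempt with its outcome and an optional lesson, plus a query that pulls recent lessons into the next run.

```python
import sqlite3
from typing import Optional


def open_memory(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)  # pass a file path to persist between runs
    conn.execute(
        """CREATE TABLE IF NOT EXISTS runs (
               id INTEGER PRIMARY KEY AUTOINCREMENT,
               task TEXT NOT NULL,
               outcome TEXT NOT NULL,
               lesson TEXT
           )"""
    )
    return conn


def remember(conn: sqlite3.Connection, task: str, outcome: str, lesson: Optional[str] = None) -> None:
    with conn:  # commits the insert, or rolls back on error
        conn.execute(
            "INSERT INTO runs (task, outcome, lesson) VALUES (?, ?, ?)",
            (task, outcome, lesson),
        )


def lessons_for(conn: sqlite3.Connection, task: str, limit: int = 5) -> list[str]:
    """Most recent lessons first, for prepending to the next run's prompt."""
    rows = conn.execute(
        "SELECT lesson FROM runs WHERE task = ? AND lesson IS NOT NULL "
        "ORDER BY id DESC LIMIT ?",
        (task, limit),
    )
    return [row[0] for row in rows]
```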

How do I manage agents safely from day one?

Write a job description with role, scope, tools, budget, outputs, and approval points. Monitor logs like you would a new teammate’s onboarding checklist. This mindset prevents most headaches.

My plan from here

This week I am turning one of my research workflows into an agent. It will scan a short list of sites, extract key updates, tag by theme, and drop a two-paragraph brief into my notes each morning. Hard budget cap, read-only everywhere, and a prompt that forces citations. If it earns its keep, I will expand memory and add a couple of safe tools.
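The tag-by-theme and citation parts of that plan are simple enough to sketch without a model. This assumes updates have already been extracted into a hypothetical `Update` shape, and the `THEMES` keyword map is a placeholder to tune; the real two-paragraph summary would come from a model call that this sketch leaves out.

```python
from dataclasses import dataclass


@dataclass
class Update:
    title: str
    url: str
    text: str


# Hypothetical theme keywords; tune to your own reading list.
THEMES = {
    "retail": ("checkout", "commerce", "shopping"),
    "infra": ("database", "memory", "orchestration"),
    "governance": ("policy", "approval", "compliance"),
}


def tag(update: Update) -> list[str]:
    text = f"{update.title} {update.text}".lower()
    themes = sorted(t for t, words in THEMES.items() if any(w in text for w in words))
    return themes or ["other"]


def brief(updates: list[Update]) -> str:
    """Group updates by theme; every line ends with its source URL as a citation."""
    by_theme: dict[str, list[str]] = {}
    for u in updates:
        for theme in tag(u):
            by_theme.setdefault(theme, []).append(f"- {u.title} ({u.url})")
    return "\n".join(f"{theme}:\n" + "\n".join(lines) for theme, lines in sorted(by_theme.items()))
```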

If you have been waiting for the right time, this cluster from Feb 9, 2026 is your permission slip. Agents are not chatbots with better vibes. They are process engines. Start with one tiny process you care about, manage it like a teammate, and let the results pull you forward.