Agentic AI finally clicked for me this morning. I opened my feeds, took a sip of coffee, and saw real launches across dev tools, chips, networks, storage, and search. Not demos. Actual products. It felt like the day autonomy stopped being a power-user experiment and started showing up where reliability and scale actually matter.

Quick answer: Agentic AI moved from hype to shipping on Feb 10, 2026, with concrete launches and announcements from GitLab, Cadence, Nebius, Telefónica with Nokia, and IBM. If you’re new, start tiny: let an agent handle one boring loop with logs and a kill switch. Favor tools that explain their plan, require citations for retrieval, and move from suggest to auto only after a few safe wins.

Why today felt different

On Feb 10, 2026, five separate announcements landed across the stack. I watched GitLab push agentic AI into delivery, saw Cadence launch an agent for chip design, and read that Nebius is acquiring Tavily for agentic search. Add Telefónica and Nokia exploring network APIs and IBM shipping autonomous storage, and the pattern is hard to miss. The experimentation window is still open, but the best seats are going to teams that start now.

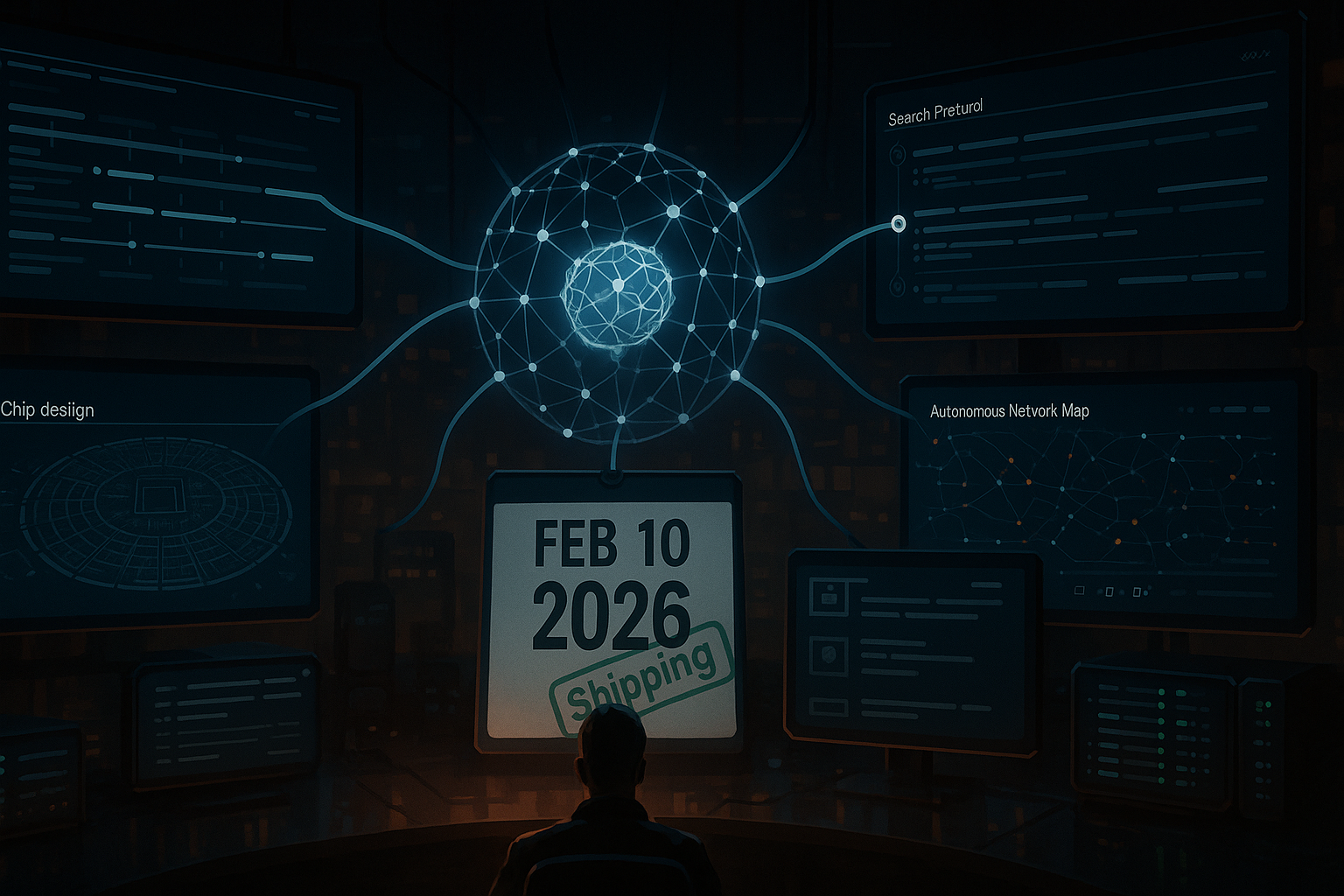

GitLab put agents inside software delivery

What happened

Also on Feb 10, 2026, at its Transcend event, GitLab showed how agentic capabilities can plan, nudge, and glue tasks across the lifecycle. Think less babysitting of CI, code reviews, and releases, more reliable progress inside the tool you already live in.

Why this matters

It’s not about AI writing your whole codebase. It’s routing the boring parts: opening the right MR template, kicking off the correct test matrix, catching flakes, and drafting release notes. Small loops compound into real time saved.

Try this if you’re new

Turn on the smallest assist. Let an agent propose a pipeline fix or organize a changelog draft. Set tight guardrails and read the logs. Confidence grows fast when it handles glue work you usually forget at 6 pm.
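
To make "propose a pipeline fix" concrete, here is a minimal sketch of one glue task: spotting flaky tests and drafting a suggestion for a human to read. It is not GitLab's feature or API. The `(commit, test_name, passed)` tuple shape and both function names are assumptions made up for illustration.

```python
from collections import defaultdict

def find_flaky_tests(runs):
    """Return sorted test names that both passed and failed on the same commit.

    `runs` is an iterable of (commit, test_name, passed) tuples. This shape is
    a hypothetical stand-in for whatever your CI system exports.
    """
    outcomes = defaultdict(set)
    for commit, test_name, passed in runs:
        outcomes[(commit, test_name)].add(passed)
    flaky = {name for (_, name), seen in outcomes.items() if seen == {True, False}}
    return sorted(flaky)

def draft_suggestion(flaky):
    """Draft a human-readable proposal; nothing is applied automatically."""
    if not flaky:
        return "No flaky tests detected; no change proposed."
    return "Proposed: quarantine or add retries for " + ", ".join(flaky)

runs = [
    ("abc123", "test_login", True),
    ("abc123", "test_login", False),    # same commit, different outcome: flaky
    ("abc123", "test_checkout", True),
    ("def456", "test_checkout", False), # different commit: could be a real break
]
print(draft_suggestion(find_flaky_tests(runs)))
```

The agent only drafts text here. Acting on the proposal stays a human click until the suggestions have been right a few times.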

Cadence launched an agent for chip design and verification

What happened

Cadence rolled out ChipStack AI on Feb 10, 2026 to automate portions of design and verification. This is high-stakes work where misses cost silicon spins and quarters, not minutes.

Why this matters

Verification is structured planning and checking, which is an agent’s sweet spot. If autonomy can reduce iteration loops in electronic design automation (EDA), it can probably trim your own review cycles and test chores too.

Try this if you’re new

Pick a repetitive review and hand an agent a checklist. Let it draft the first pass, then you approve. Start with linty, rule-based steps before judgment calls. The Cadence move just validated that path.
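
A checklist-driven first pass can be sketched in a few lines. This has nothing to do with Cadence's product; the three rules, the `CHECKLIST` name, and the `TODO(#123)` convention are hypothetical examples of "linty" checks you might hand over before any judgment calls.

```python
import re

# Hypothetical rule-based checklist: (description, predicate on the diff text).
CHECKLIST = [
    ("no leftover debug prints", lambda diff: "print(" not in diff),
    ("no TODO without an issue reference",
     lambda diff: not re.search(r"TODO(?!\(#\d+\))", diff)),
    ("diff is under 400 lines", lambda diff: len(diff.splitlines()) < 400),
]

def first_pass_review(diff):
    """Run every rule and return (description, passed) pairs for a human to approve."""
    return [(description, bool(check(diff))) for description, check in CHECKLIST]

diff = "+ total = add(a, b)\n+ # TODO tidy this up\n"
for description, passed in first_pass_review(diff):
    print("PASS" if passed else "FAIL", description)
```

Every rule is deterministic, so a failed check is easy to argue with. That makes this a safer starting point than asking an agent whether the design "looks right".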

Nebius is buying Tavily to supercharge agentic search

What happened

Nebius announced the Tavily acquisition on Feb 10, 2026 to strengthen agentic AI search. Tavily is known for planning which sources to query, reading them, and reasoning across them instead of just returning links.

Why this matters

Agents stumble on the open web when context breaks. Agentic search wraps planning, retrieval, and self-checking so results stay grounded. If you want reliable agents, search has to think in steps, not tokens.

Try this if you’re new

Stop pasting prompts stuffed with 20 URLs. Run a retrieval step, feed results in small chunks, and require citations when possible. You’ll see fewer confident wrong answers and more explainable outputs.
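
Here is a rough sketch of the "small chunks plus required citations" habit. It does not call Tavily or any real search API. The documents are passed in as plain `(url, text)` pairs, and `chunk_text`, `build_context`, and `has_valid_citations` are names I made up for the example.

```python
import re

def chunk_text(text, max_words=50):
    """Split text into chunks of at most `max_words` words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def build_context(documents, max_words=50):
    """Number each chunk so an answer can cite it as [1], [2], ..."""
    sources = []
    for url, text in documents:
        for chunk in chunk_text(text, max_words):
            sources.append({"id": len(sources) + 1, "url": url, "text": chunk})
    return sources

def has_valid_citations(answer, sources):
    """Accept an answer only if it cites at least one source and every cited id exists."""
    cited = {int(n) for n in re.findall(r"\[(\d+)\]", answer)}
    known = {source["id"] for source in sources}
    return bool(cited) and cited <= known

# Placeholder document: 120 words retrieved from a made-up URL.
sources = build_context([("https://example.com/a", "word " * 120)])
print(len(sources), has_valid_citations("The claim holds [2].", sources))
```

Rejecting answers with missing or unknown citation ids does not prove the answer is right, but it does force every claim to point at something you can open and check.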

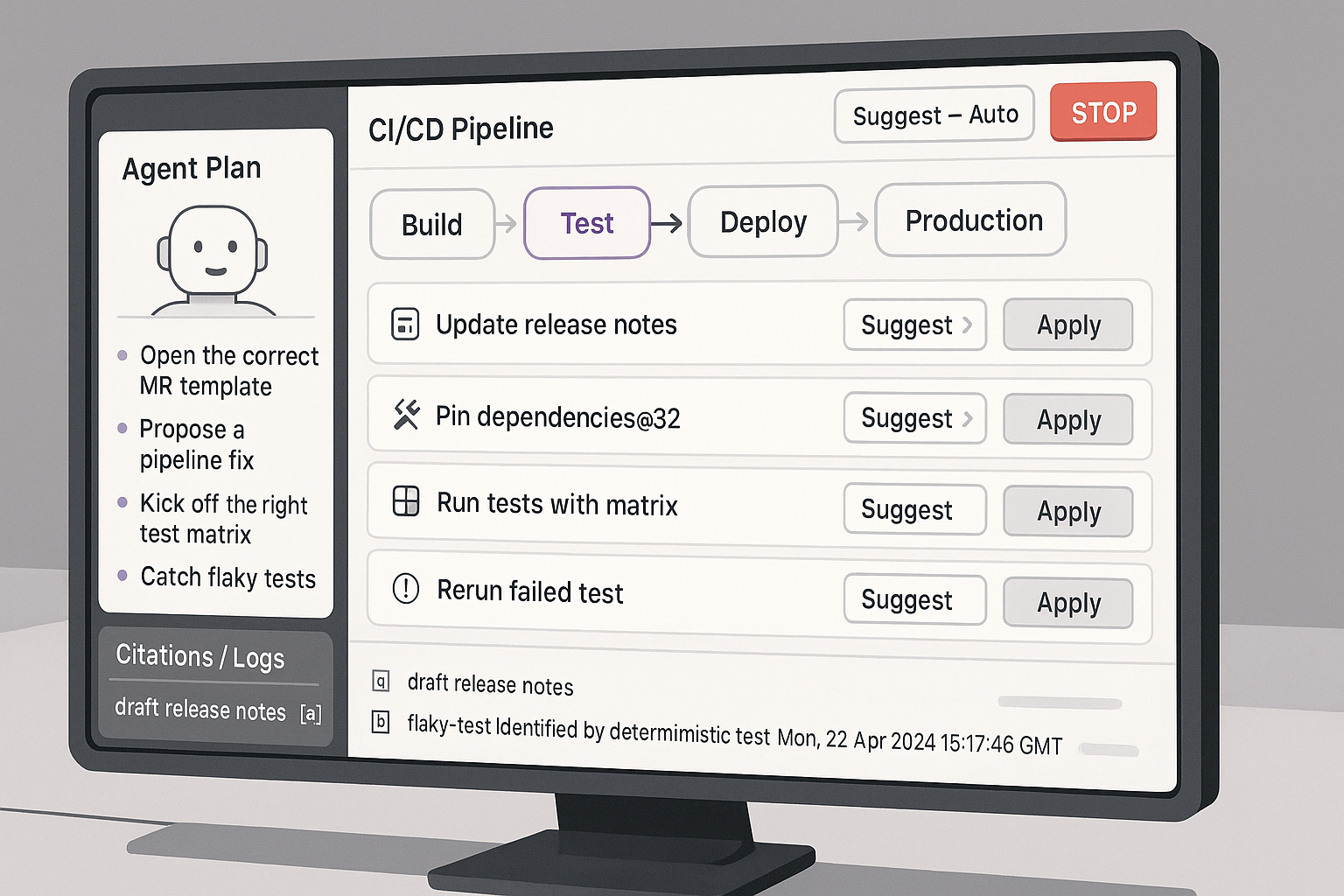

Telefónica and Nokia brought agents to network APIs

What happened

Also on Feb 10, 2026, Telefónica and Nokia discussed applying agentic AI to discover and use carrier-grade network functions via clean APIs. Imagine an app that asks for bandwidth-on-demand or tweaks QoS for a live stream without a human juggling dashboards.

Why this matters

Networks are where theory dies. If agents can adjust network behavior in real time using APIs, we inch closer to autonomous apps that sense needs and self-tune.

Try this if you’re new

You probably can’t hit carrier APIs yet. Simulate the pattern by exposing a tiny internal API for a queue or cache. Let an agent watch a metric and scale up or down.
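
The watch-one-metric pattern can be simulated without any network gear. This is a toy sketch: the queue-depth metric, the thresholds, and the `decide_scale` function are all assumptions, and the "internal API" is reduced to a plain function call.

```python
def decide_scale(queue_depth, workers, high=100, low=10, min_workers=1, max_workers=8):
    """Return the new worker count for one observation of the metric."""
    if queue_depth > high and workers < max_workers:
        return workers + 1  # backlog is growing: add one worker
    if queue_depth < low and workers > min_workers:
        return workers - 1  # queue is nearly empty: remove one worker
    return workers          # inside the band, or already at a limit

workers = 2
for depth in [150, 180, 40, 5, 3, 2]:
    new_workers = decide_scale(depth, workers)
    print(f"depth={depth} workers={workers} -> {new_workers}")
    workers = new_workers
```

The gap between `low` and `high` keeps the agent from flapping, and the hard `min_workers` and `max_workers` bounds cap how much damage a bad reading can do.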

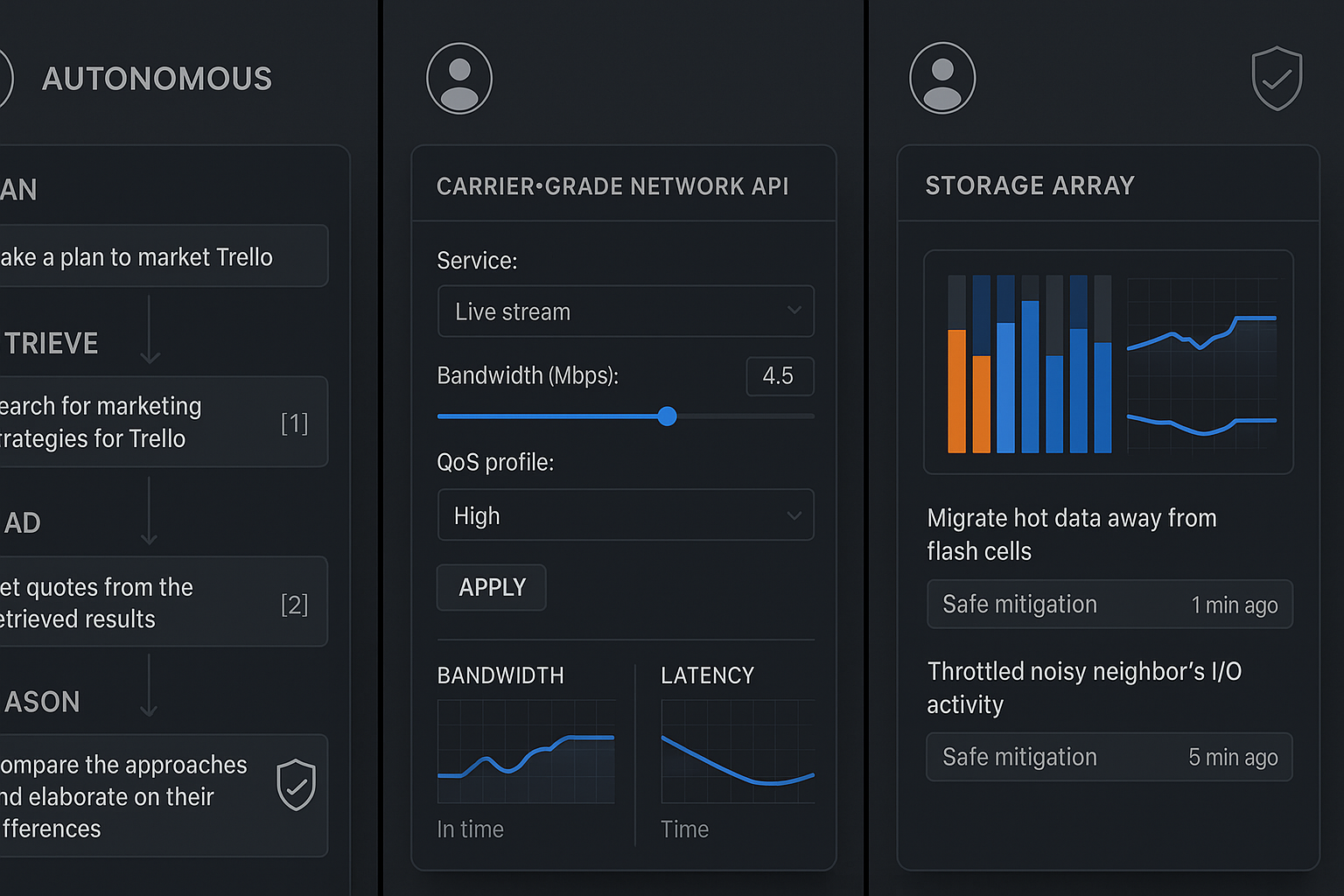

IBM is shipping storage that manages itself

What happened

On Feb 10, 2026, IBM introduced autonomous flash storage powered by agentic AI. Storage is the reliability layer, so shipping agents there signals scoped, auditable loops that can be trusted under load.

Why this matters

Infra hides slow-burn issues like hot spots and noisy neighbors. Agentic storage spots symptoms early and takes targeted action before they become someone’s 2 am page.

Try this if you’re new

Rehearse with safe playbooks. Let an agent watch disk latency and draft recommendations. You click to apply. After a few boring wins, move to auto-apply within tight thresholds.
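
One way to encode "draft first, auto-apply only within tight thresholds" is a small gate like the one below. It is a sketch under my own assumptions and not IBM's behavior: the latency bands, the `rebalance_hot_volume` action name, and the three-clean-passes rule are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Recommendation:
    action: str
    auto_apply: bool
    reason: str

def recommend(latency_ms, clean_passes, warn_ms=20.0, auto_max_ms=50.0, required_passes=3):
    """Draft a storage action; allow auto-apply only inside a narrow, pre-approved band."""
    if latency_ms < warn_ms:
        return Recommendation("none", False, "latency within normal range")
    trusted = clean_passes >= required_passes  # enough boring wins so far
    in_band = latency_ms <= auto_max_ms        # severe cases always go to a human
    return Recommendation(
        action="rebalance_hot_volume",
        auto_apply=trusted and in_band,
        reason=f"latency {latency_ms} ms at or above {warn_ms} ms",
    )

print(recommend(30.0, clean_passes=0))
print(recommend(30.0, clean_passes=3))
print(recommend(80.0, clean_passes=10))
```

Note that very high latency never auto-applies, no matter how much trust has built up. The scary cases are exactly the ones where you want a person looking.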

What this means for you this week

The same autonomy pattern is landing across delivery, chips, search, networks, and storage. If you start with one scoped loop, you can build trust fast and stack wins without breaking anything.

- Pick one glue task and give an agent ownership with logs, fallbacks, and a kill switch.

- Favor tools that show their plan and cite sources for retrieval-heavy work.

- Start in suggest mode, then graduate to auto with alerts after a few clean passes.
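
The three bullets above can be sketched as one small loop. This is a minimal illustration, not a framework: the kill switch is just a file whose presence stops the run, and `run_loop`, the `STOP` filename, and the task strings are hypothetical.

```python
import logging
import os
import tempfile

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("agent")

def run_loop(tasks, kill_switch_path, mode="suggest"):
    """Process tasks one at a time, checking the kill switch before each step."""
    results = []
    for task in tasks:
        if os.path.exists(kill_switch_path):
            log.warning("kill switch present, stopping before %r", task)
            break
        if mode == "auto":
            log.info("applied %r", task)
            results.append(("applied", task))
        else:
            log.info("suggested %r (awaiting human approval)", task)
            results.append(("suggested", task))
    return results

with tempfile.TemporaryDirectory() as workdir:
    stop_file = os.path.join(workdir, "STOP")
    print(run_loop(["draft release notes", "tag issues"], stop_file))
    open(stop_file, "w").close()  # anyone on the team can create this file
    print(run_loop(["draft release notes"], stop_file, mode="auto"))
```

A file-based switch is deliberately low-tech: it works even when the agent, the dashboard, or your patience is misbehaving.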

The beginner path I wish I had 6 months ago

Week 1: Make the tiniest loop real

Pick something low-risk like drafting release notes, tagging issues, or summarizing PRs. Success is a clear log and a result you don’t have to rewrite.

Week 2: Add a tool, not more model

Don’t chase bigger models. Give the agent read-only repo access or a search endpoint with citations. Tooling usually beats temperature tweaks.

Week 3: Put a leash on it

Set a time limit, a budget cap, and require review for anything that changes state. Agents love to wander. Your job is to keep them in the yard.
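
A leash can be as simple as a deadline, a spend counter, and a review gate. The sketch below assumes each action is a plain dict with `name`, `cost`, and `changes_state` keys; that shape, the `Leash` class, and the `execute` function are illustrative choices, not any library's API.

```python
import time

class Leash:
    """Track a wall-clock deadline and a spend cap for one agent run."""

    def __init__(self, max_seconds, max_cost):
        self.deadline = time.monotonic() + max_seconds
        self.remaining = max_cost

    def charge(self, cost):
        """Record spend; raise if the time limit has passed or the budget would be exceeded."""
        if time.monotonic() > self.deadline:
            raise TimeoutError("time limit reached")
        if cost > self.remaining:
            raise RuntimeError("budget cap reached")
        self.remaining -= cost

def execute(action, leash, approved=False):
    """Run one action dict with keys: name, cost, changes_state."""
    if action["changes_state"] and not approved:
        return f"held for review: {action['name']}"  # nothing is spent or changed
    leash.charge(action["cost"])
    return f"ran: {action['name']}"

leash = Leash(max_seconds=60, max_cost=1.0)
print(execute({"name": "read_logs", "cost": 0.6, "changes_state": False}, leash))
print(execute({"name": "restart_service", "cost": 0.1, "changes_state": True}, leash))
```

Raising an exception on a blown limit is the point: the run stops loudly instead of wandering on quietly.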

How I’m using today’s news to level up

GitLab nudges me to wire agents into the tools where I already spend hours, not in a sidecar I’ll forget. Cadence pushes me to expand my verification checklists. Nebius and Tavily make me demand planned retrieval and citations. Telefónica and Nokia remind me to expose tiny internal APIs. IBM gives me confidence to flip one or two infra knobs from suggest to apply.

Before you bounce, one honest caution

Agentic AI is powerful, but its failure modes are quiet. It can do the wrong thing and log it beautifully. The teams above are framing autonomy with explainability, guardrails, and scope. Do the same and you’ll sleep fine.

FAQ

What is agentic AI in simple terms?

Agentic AI plans a task, takes actions with tools or APIs, checks its own work, and loops until it reaches a goal. It’s less about chatting and more about doing, with clear steps you can audit.
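
That plan, act, check, loop cycle fits in a few lines. In the sketch below the `plan`, `act`, and `check` callables are toy stand-ins I invented (they count up to a target number); in a real setup they would wrap a model call, a tool call, and a verification step.

```python
def run_agent(goal, plan, act, check, max_iterations=5):
    """Plan, act, check, and repeat until the check passes or iterations run out."""
    history = []
    state = None
    for step in range(1, max_iterations + 1):
        action = plan(goal, state)  # decide the next step from the current state
        state = act(action)         # carry it out with a tool or API
        ok = check(goal, state)     # verify the result against the goal
        history.append({"step": step, "action": action, "state": state, "ok": ok})
        if ok:
            break
    return history

# Toy stand-ins for a model and a tool: reach a target number by incrementing.
def plan(goal, state):
    return 0 if state is None else state + 1

def act(action):
    return action

def check(goal, state):
    return state == goal

history = run_agent(goal=3, plan=plan, act=act, check=check, max_iterations=10)
for entry in history:
    print(entry)
```

The `history` list is the auditable part: every step records what was planned, what happened, and whether the check passed. The `max_iterations` cap keeps a stuck loop from running forever.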

How do I start using agentic AI at work?

Begin with one repetitive task that is low-risk. Add basic tools like repo read-only access or retrieval with citations. Keep logs, set limits, and require human review for any change to production until you trust the loop.

Is agentic AI safe for production systems?

It can be, if you scope it tightly and make the plan explainable. Start in suggest mode, track metrics, and only graduate to auto within strict thresholds and alerts. The key is auditable steps and fast rollback.

Do I need a big model to see value?

Most gains come from better tooling and clear guardrails. A mid-tier model with retrieval, structured prompts, and good monitoring often beats a larger model with no tools.