I did what I always do when the AI noise gets loud. I made coffee, closed the hype tabs, and went straight to the pieces that matter. On January 30, 2026, two articles landed that made me sit up: a breakdown of Google’s SAGE agentic AI and a plain-English guide to the rising mess of AI protocols. If you care about SEO, automation, or you’re a beginner trying to build your first agent, this is the moment to get oriented without wasting a month on dead ends.

The 5-minute version

- On January 30, 2026, a Search Engine Journal piece highlighted Google’s SAGE agentic AI research and how it could reshape SEO by evaluating whether content helps users get things done.

- That same day, The Register unpacked AI protocols vying to standardize how agents call tools and talk to each other, which matters if you want choices that will not trap you later.

Here’s how I’m processing it, like I would explain it to a friend over lunch.

Google’s SAGE: why SEO should actually care

SAGE is research, not a product, but when Google studies agentic AI workflows, I pay attention. The shift is simple to say and big to live with: instead of ranking pages that match queries, agentic systems try to complete a task and use your content as part of that workflow. The question moves from “does this headline mirror the keyword?” to “can an agent use this page to get something done?”

That nudges SEO toward utility, clarity, and verification. If you publish, think less fluff and more action. Make steps explicit, make data verifiable, and give agents stable artifacts they can extract and reuse.

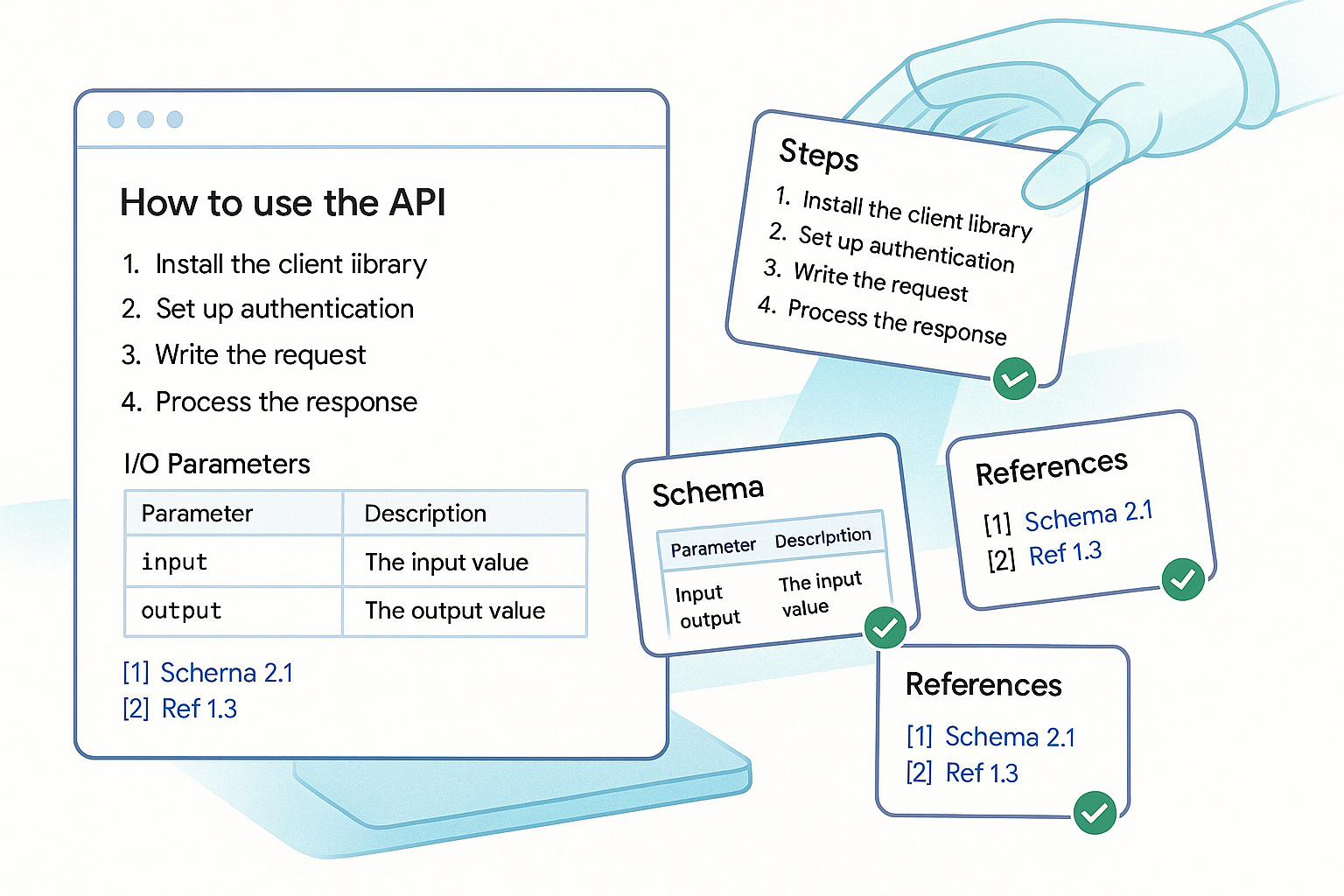

What this means if you publish anything

Spell out steps in a way both humans and machines can follow. If you have a tutorial or a guide, use action-focused headings and include examples, parameters, or tiny code snippets that an agent can reliably pick up. This is classic agentic AI hygiene, and it pays off for beginners and pros.

Anchor claims with links to official docs, version numbers, and structured data where it fits. Showing expected inputs and outputs builds trust and gives an agent something concrete to check, which leaves less room for hallucinated details. If you cite a price, a spec, or a policy, link the live reference an agent can cross-check.
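One way to pair explicit steps with structured data is schema.org `HowTo` markup. Here is a minimal Python sketch that renders it as JSON-LD. To be clear, nothing in the SAGE coverage says Google reads this markup for agentic evaluation; that is my assumption about what "structured data where it fits" could look like, and the guide title and step text are made up for illustration.

```python
import json


def howto_jsonld(name: str, steps: list[dict]) -> str:
    """Render a schema.org HowTo object as a JSON-LD string."""
    doc = {
        "@context": "https://schema.org",
        "@type": "HowTo",
        "name": name,
        "step": [
            {
                "@type": "HowToStep",
                "position": position,
                "name": step["name"],
                "text": step["text"],
            }
            for position, step in enumerate(steps, start=1)
        ],
    }
    return json.dumps(doc, indent=2)


# Hypothetical guide content, only here to show the shape of the output.
example = howto_jsonld(
    "Schedule a meeting from an email request",
    [
        {"name": "Extract details", "text": "Pull who, when, and topic from the message."},
        {"name": "Check calendar", "text": "Look up free slots for the requested day."},
    ],
)
print(example)
```

The output would go inside a `<script type="application/ld+json">` tag on the page, next to the human-readable steps it describes.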

Show your work with small experiments, sample data, and measurable results. Even a lightweight demo ages better than opinion paragraphs. You are writing for people first, and you are equipping their agents to act.

The AI protocol alphabet soup, decoded

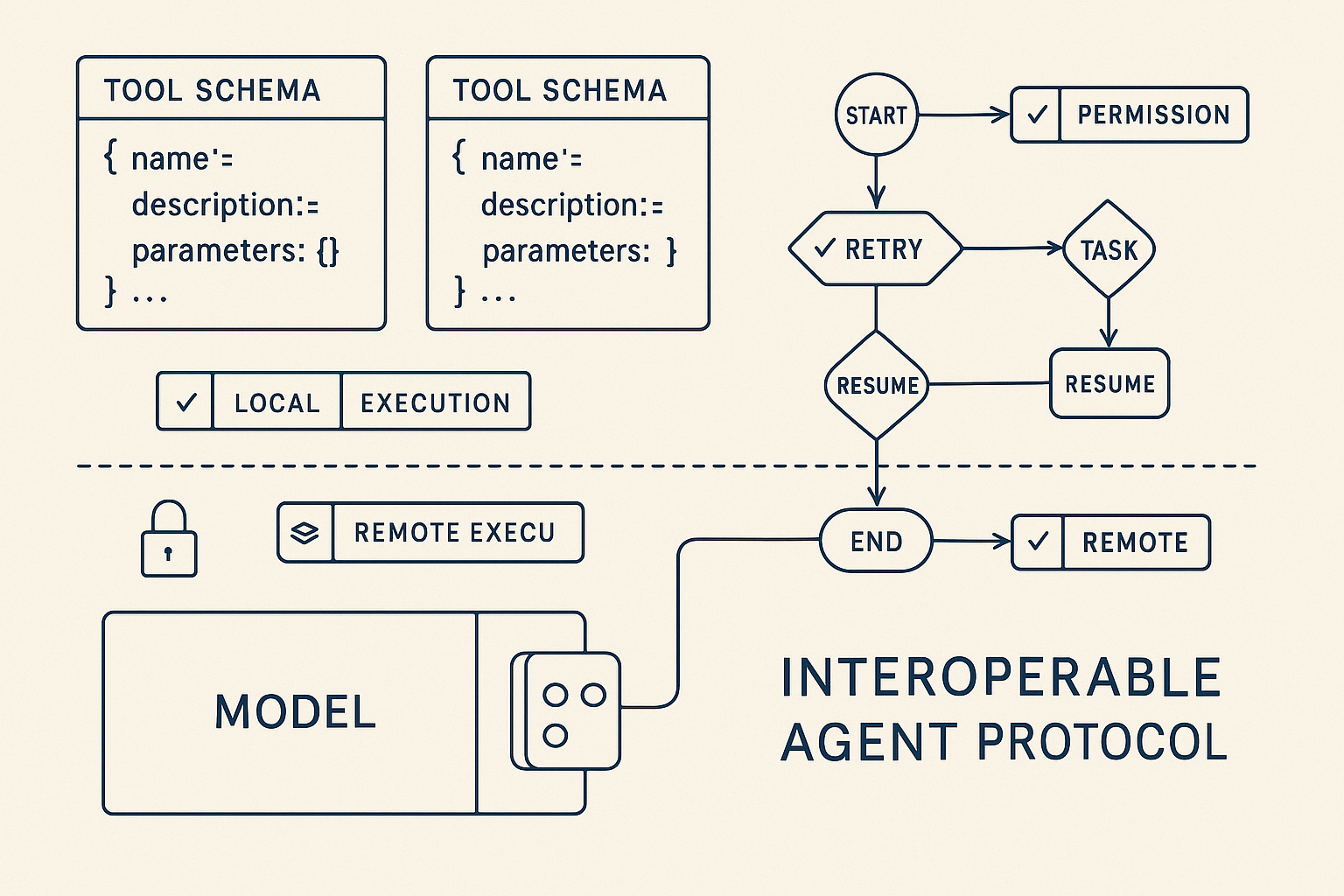

The Register’s January 30 rundown on AI protocols was a breath of fresh air. The promises all sound the same on stage: planning, tool use, collaboration, safety. Under the hood, a few choices will make or break your build.

Start with tool calling. You want clear schemas for tools, predictable interfaces, and a way to swap models without rebuilding everything. If your tools are well-defined, you keep the leverage.
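To make "clear schemas" concrete, here is a small sketch of a tool described independently of any model provider. The `Tool` class, `call_tool`, and the canned `check_calendar` function are all hypothetical names I made up; the parameter description follows the JSON Schema style that most tool-calling APIs accept, but the validation here is deliberately tiny and only checks required keys and two primitive types.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Tool:
    name: str
    description: str
    parameters: dict[str, Any]  # JSON-Schema-style description of the arguments
    handler: Callable[..., Any]


def check_calendar(date: str, duration_minutes: int) -> list[str]:
    """Hypothetical tool: returns canned slots instead of calling a real calendar."""
    return [f"{date}T10:00", f"{date}T14:00"]


CHECK_CALENDAR = Tool(
    name="check_calendar",
    description="Return free slots on a given date.",
    parameters={
        "type": "object",
        "properties": {
            "date": {"type": "string"},
            "duration_minutes": {"type": "integer"},
        },
        "required": ["date", "duration_minutes"],
    },
    handler=check_calendar,
)

REGISTRY = {CHECK_CALENDAR.name: CHECK_CALENDAR}
_PY_TYPES = {"string": str, "integer": int}  # only the types this sketch uses


def call_tool(name: str, arguments: dict[str, Any]) -> Any:
    """Check arguments against the tool's schema, then dispatch to its handler."""
    tool = REGISTRY[name]
    props = tool.parameters["properties"]
    missing = [key for key in tool.parameters["required"] if key not in arguments]
    if missing:
        raise ValueError(f"missing arguments: {missing}")
    for key, value in arguments.items():
        if key not in props:
            raise ValueError(f"unexpected argument: {key}")
        if not isinstance(value, _PY_TYPES[props[key]["type"]]):
            raise TypeError(f"{key} should be of type {props[key]['type']}")
    return tool.handler(**arguments)


print(call_tool("check_calendar", {"date": "2026-02-02", "duration_minutes": 30}))
```

Because the schema lives with the tool and not with the model SDK, swapping providers means translating `parameters` into the new provider's format, not rewriting the tool.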

State and memory matter more than the demos suggest. Real automation needs persistent state, recoverability, and context that does not live in one giant prompt. Look for graph-like orchestration or, at minimum, a clean way to checkpoint and resume.
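Checkpoint and resume sounds heavier than it needs to be. Below is a bare-bones sketch that writes state to a JSON file after every step and skips finished steps on the next run. The `run_steps` helper and the two demo steps are my own illustration, not any framework's API, and a real runner would want atomic writes and more careful error handling.

```python
import json
import tempfile
from pathlib import Path


def run_steps(steps, checkpoint: Path) -> dict:
    """Run (name, function) steps in order, saving state after each one."""
    if checkpoint.exists():
        state = json.loads(checkpoint.read_text())
    else:
        state = {"done": [], "data": {}}
    for name, fn in steps:
        if name in state["done"]:
            continue  # finished in an earlier run, so skip it
        state["data"][name] = fn(state["data"])
        state["done"].append(name)
        checkpoint.write_text(json.dumps(state))
    return state


calls = []  # records which steps actually executed


def extract(data):
    calls.append("extract")
    return {"topic": "roadmap"}


attempts = {"n": 0}


def flaky_draft(data):
    """Fails on its first call to simulate a crash mid-run."""
    attempts["n"] += 1
    if attempts["n"] == 1:
        raise RuntimeError("simulated crash")
    return f"Re: {data['extract']['topic']}"


steps = [("extract", extract), ("draft", flaky_draft)]
path = Path(tempfile.mkdtemp()) / "run.json"

try:
    run_steps(steps, path)
except RuntimeError:
    pass  # the first run dies after "extract" was checkpointed

state = run_steps(steps, path)  # the second run resumes at "draft"
print(state["done"], calls)
```

The second run never re-executes `extract`, which is the whole point: the context lives on disk, not in one giant prompt.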

Transport and security show up the moment you leave local function calls. Remote tools, sandboxes, or browser automation change the threat model. Pick a path that handles local and remote execution with permissions you can audit.

And avoid lock-in. AI protocols are evolving. Keep tools portable and treat your orchestration as glue, not cement. That way your investment is in the tools and data, not the framework quirks.

Pick a starter stack that will not box you in

If I were starting today, I would pick a mainstream model provider I trust and learn their tool calling well. Then I would add a thin orchestration layer that supports branching, retries, and explicit schemas. I keep tools as small functions or microservices I could call outside the agent loop.
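For the retries part, this is about as thin as an orchestration helper gets. It is a sketch under my own assumptions: `with_retries` and the flaky lookup are hypothetical names, the backoff is plain doubling, and the `sleep` parameter exists so the demo can record delays without actually waiting.

```python
import time


def with_retries(fn, attempts=3, base_delay=0.5, sleep=time.sleep):
    """Call fn(); on an exception, back off and try again up to `attempts` times."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            return fn()
        except Exception as exc:
            last_error = exc
            if attempt < attempts:
                sleep(base_delay * 2 ** (attempt - 1))  # 0.5s, 1.0s, 2.0s, ...
    raise last_error


counter = {"calls": 0}


def flaky_calendar_lookup():
    """Hypothetical network tool that fails twice before succeeding."""
    counter["calls"] += 1
    if counter["calls"] < 3:
        raise ConnectionError("simulated timeout")
    return ["Tue 10:00", "Wed 14:00"]


delays = []  # capture the backoff delays without really sleeping
slots = with_retries(flaky_calendar_lookup, sleep=delays.append)
print(slots, delays)
```

Note that the tool itself knows nothing about retries. It stays a small function I could call from a script or a cron job, outside the agent loop.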

My workflow is boring on purpose: test a short loop with one tool locally, introduce a stateful runner with logging, then add a second tool that requires a network call. If those three phases are observable and solid, the rest scales.

A dead-simple weekend agent that actually teaches you

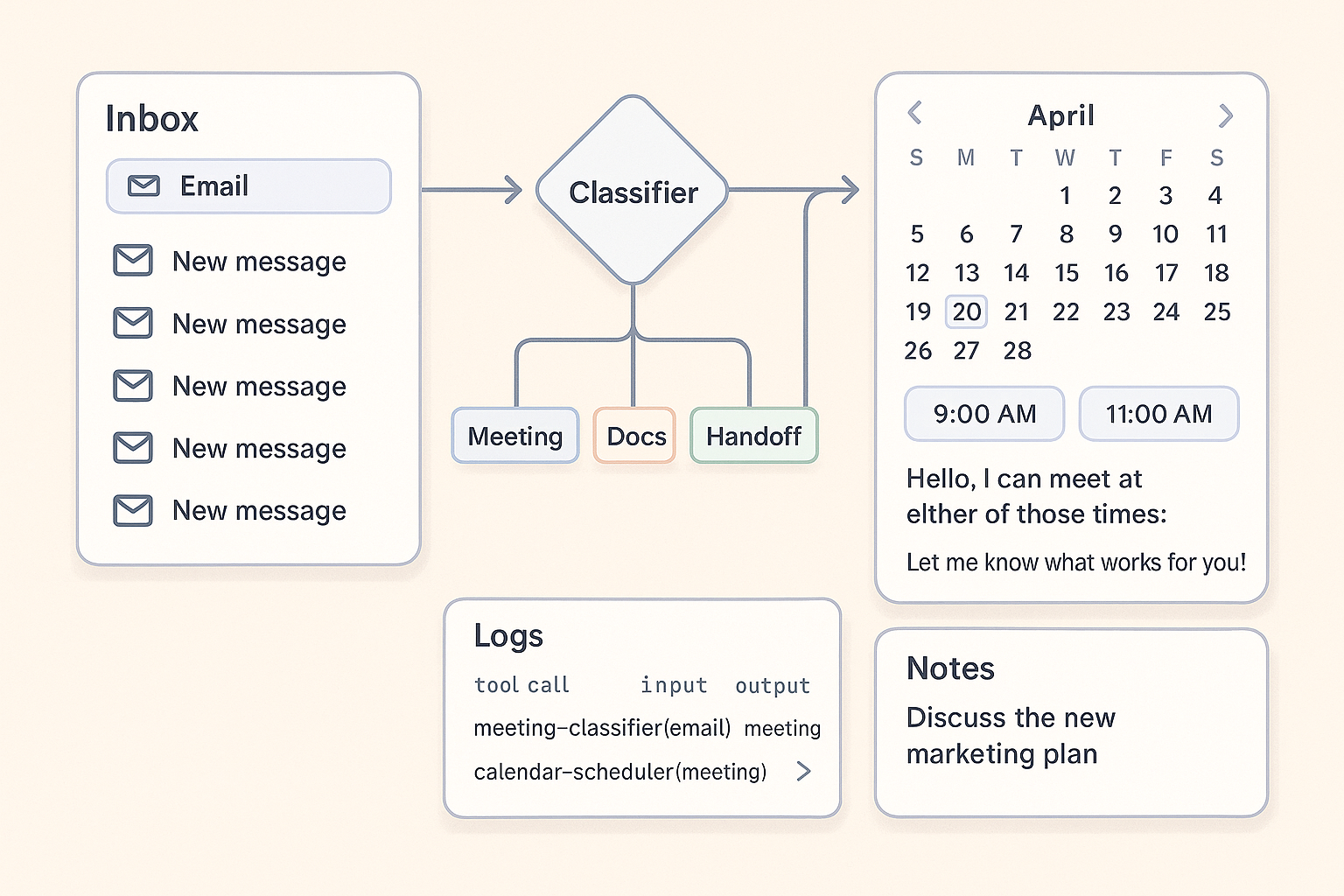

Build a small assistant that handles inbound email requests to schedule meetings and file notes. It is perfect for beginners and still useful if you already live in automation.

Here is the flow I use. Watch a mailbox label for new messages, then classify each one as a meeting, a document request, or a handoff.

- Meetings: extract who, when, and topic, check a calendar API, propose two time options, draft a reply from a template, and file a summary to your notes app with the original email and the extracted fields.

- Document requests: pull from a short list of approved links, draft a reply with two options, and log what was sent.

- Handoffs: forward the message and attach a short brief with context and suggested next steps.
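The classify-then-branch skeleton of that flow fits in a few dozen lines. In this sketch the classifier is a keyword stand-in where a model call would go, the slots and links are canned, and every name and address (`triage`, `APPROVED_LINKS`, `team@example.com`) is a placeholder I invented. The point is the branching shape, not the intelligence.

```python
import re
from dataclasses import dataclass


@dataclass
class Email:
    sender: str
    subject: str
    body: str


def classify(email: Email) -> str:
    """Stand-in classifier: keyword rules where a model call would normally go."""
    text = f"{email.subject} {email.body}".lower()
    if re.search(r"\b(meet|meeting|call|schedule)\b", text):
        return "meeting"
    if re.search(r"\b(document|doc|link|deck|send me)\b", text):
        return "document_request"
    return "handoff"


# Hypothetical approved links; a real list would live in config.
APPROVED_LINKS = ["https://example.com/pricing", "https://example.com/onboarding"]


def handle_meeting(email: Email) -> dict:
    slots = ["Tue 10:00", "Wed 14:00"]  # canned; a calendar API would supply these
    return {"action": "reply", "to": email.sender,
            "body": f"Would {slots[0]} or {slots[1]} work?"}


def handle_document_request(email: Email) -> dict:
    return {"action": "reply", "to": email.sender,
            "body": "Two options: " + ", ".join(APPROVED_LINKS[:2])}


def handle_handoff(email: Email) -> dict:
    return {"action": "forward", "to": "team@example.com",
            "body": f"Brief: '{email.subject}' from {email.sender}. Needs a human."}


HANDLERS = {
    "meeting": handle_meeting,
    "document_request": handle_document_request,
    "handoff": handle_handoff,
}


def triage(email: Email) -> dict:
    """Classify the email, run the matching branch, and tag the result."""
    intent = classify(email)
    result = HANDLERS[intent](email)
    result["intent"] = intent
    return result


print(triage(Email("ana@example.com", "Can we meet next week?", "Topic: roadmap")))
```

Once this skeleton works, swapping `classify` for a real model call changes one function and leaves the branches alone.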

This one project forces you to practice tool calling, structured extraction, and decision branches. You can build the skeleton in a day, then use the second day to add guardrails and observability.

Minimal tech choices that will not age badly

- Language: Python or TypeScript. Both have strong ecosystems for agents and HTTP services.

- Orchestration: something that defines steps as a chain or graph with retries and logging. It does not need to be the fanciest option; it needs visible state.

- Tools: wrap calendar, notes, and email as small, schema-described functions or microservices you can call outside the agent.

- Observability: log every tool call with inputs, outputs, and a short reason to make debugging painless.
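The observability item is the cheapest one to get right early. Here is a sketch of a decorator that records inputs, outputs, and a short reason for every tool call. The `logged_tool` name, the keyword-only calling convention, and the in-memory `CALL_LOG` list are my own choices for illustration; in practice the records would go to whatever log sink you already use.

```python
import functools
import json
import logging

logger = logging.getLogger("agent.tools")
CALL_LOG: list[dict] = []  # in-memory copy, handy for tests and debugging


def logged_tool(fn):
    """Wrap a tool so each call records its inputs, output or error, and a reason."""
    @functools.wraps(fn)
    def wrapper(*, reason: str, **kwargs):
        record = {"tool": fn.__name__, "reason": reason, "inputs": kwargs}
        try:
            record["output"] = fn(**kwargs)
            return record["output"]
        except Exception as exc:
            record["error"] = repr(exc)
            raise
        finally:
            # Runs on success and on failure, so no call goes unrecorded.
            CALL_LOG.append(record)
            logger.info(json.dumps(record, default=str))
    return wrapper


@logged_tool
def propose_times(date: str) -> list[str]:
    """Hypothetical tool returning canned slots."""
    return [f"{date}T10:00", f"{date}T14:00"]


slots = propose_times(reason="sender asked for a meeting", date="2026-02-02")
print(CALL_LOG[-1])
```

Forcing a `reason` argument feels fussy for about a day. After that it is the field I read first when something goes wrong.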

Be thoughtful about privacy. Redact sensitive fields in logs, avoid storing full bodies unless you must, and add a quick approval step for outbound messages in week one. When you trust it, switch to auto-send for low-risk replies.
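Both habits are small in code. This sketch shows a rough redaction pass for logs and an approval gate for outbound drafts. The regexes are intentionally crude and will miss plenty of real-world formats, and `send_with_approval`, the `risk` field, and the callback arguments are hypothetical names I picked for the example.

```python
import re

# Rough patterns for illustration only; real redaction needs broader coverage.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Mask email addresses and phone-like numbers before text reaches a log."""
    text = EMAIL_RE.sub("[email]", text)
    return PHONE_RE.sub("[phone]", text)


def send_with_approval(draft: dict, approve, send, auto_send_low_risk=False) -> str:
    """Send a draft only after approval, unless low-risk auto-send is switched on."""
    if auto_send_low_risk and draft.get("risk") == "low":
        send(draft)
        return "sent"
    if approve(draft):
        send(draft)
        return "sent"
    return "held"


print(redact("Reach me at jo@example.com or +1 415 555 0100."))

outbox = []
draft = {"to": "jo@example.com", "body": "Would Tue 10:00 work?", "risk": "low"}

# Week one: every message waits for a human, and this reviewer says no.
print(send_with_approval(draft, approve=lambda d: False, send=outbox.append))

# Later: low-risk replies go out without the approval step.
print(send_with_approval(draft, approve=lambda d: False, send=outbox.append,
                         auto_send_low_risk=True))
```

The switch from week-one caution to auto-send is a single flag, which makes it easy to flip back if something looks off.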

Where this is heading

We are moving toward action-oriented search and interoperable agents. The SAGE angle pushes content toward utility that agents can actually use. The AI protocols discussion shows the industry inching toward saner ways to connect models to tools without brittle one-offs.

What I am watching next: whether agentic evaluations show up in user-facing search with step outlines, tool calls, and cited sources, which would make utility the ranking signal to beat. I am also watching for convergence around secure tool invocation and stateful orchestration, because teams are tired of rebuilding plumbing.

If you are new to agentic AI, this is a great moment. Build something tiny, make it observable, and keep your tools portable. If you publish online, treat your content like a component an agent can run with. That mindset will age well, regardless of which AI protocols end up on top.