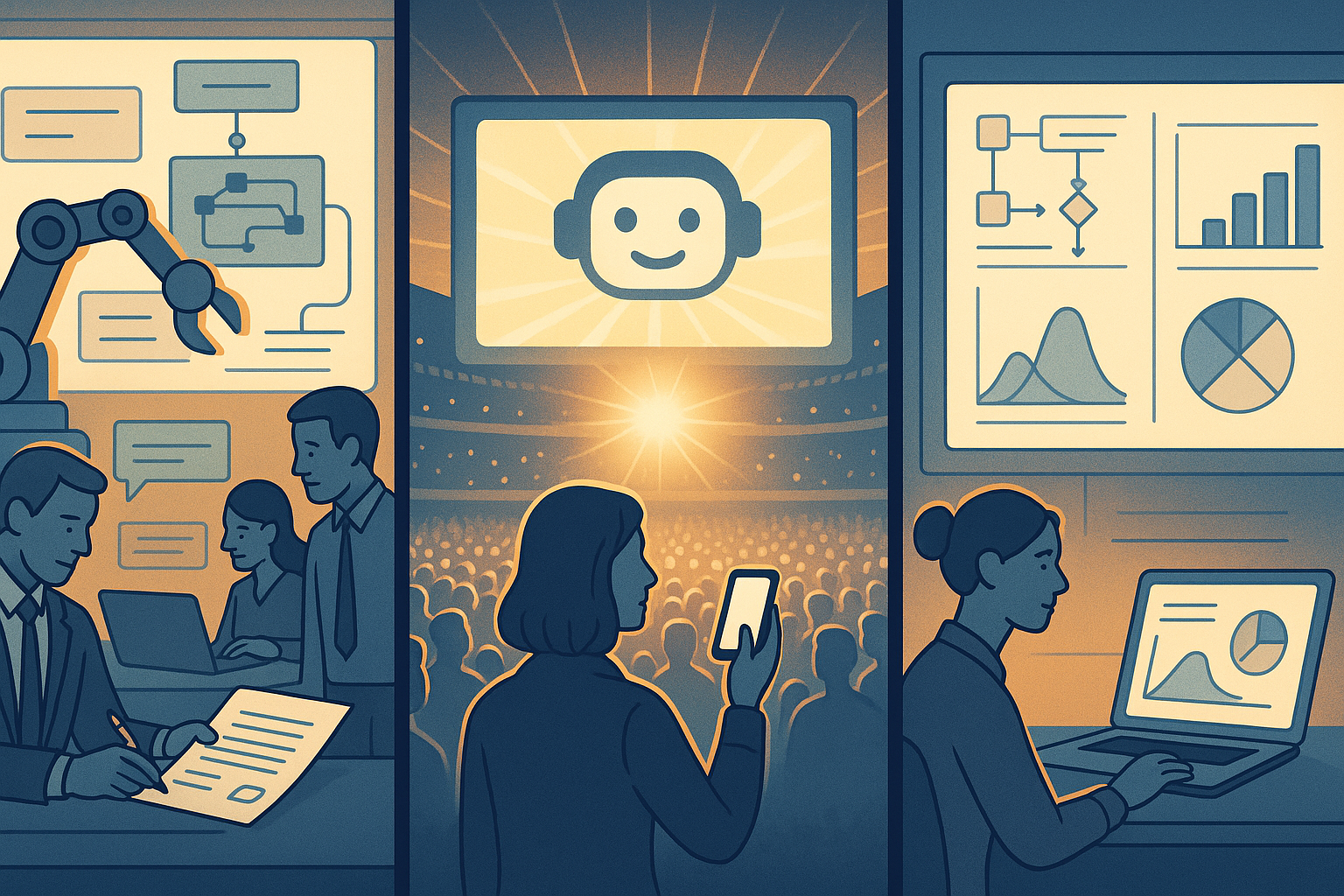

Agentic AI just hit a point of no return this weekend, and I felt it in my gut.

Agentic AI is no longer a demo. Over Feb 7 and 8, 2026, we got enterprise consolidation, a Super Bowl moment, and real research tooling. I spent the weekend reading the updates and came away convinced this changes how I’ll work this year.

Quick answer

Agentic AI is moving from chat to action. In 48 hours, UiPath said it would buy WorkFusion to bolster agentic automation, AI.com ran a Super Bowl ad for a consumer agentic platform, and Google’s PaperBanana showed off publication-ready, agent-made diagrams and plots. If you start with small, verifiable workflows this week, you’ll feel the compounding time savings fast.

What is agentic AI, in plain English

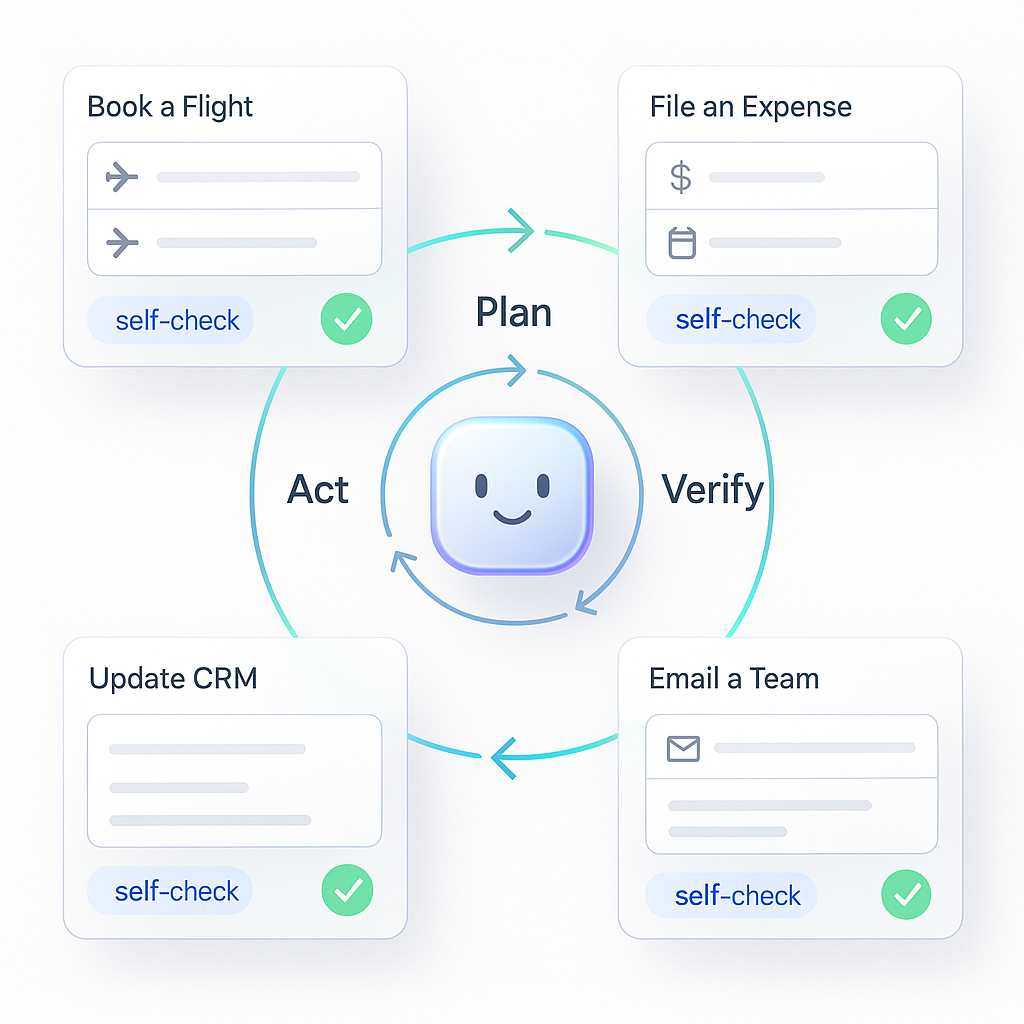

An agent doesn’t just answer. It decides what to do next to reach a goal, runs actions across tools, checks its own work, and tries again. Think of the assistant that books the flight, files the expense, updates the CRM, and emails the team without you babysitting every click. That loop of plan, act, and verify is the whole game.
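
Here’s a minimal Python sketch of that loop, just to make the shape concrete. It assumes you supply your own `plan`, `act`, and `verify` functions; every name here is hypothetical and not any framework’s API. The point is the structure: each attempt is planned with knowledge of earlier attempts, executed, checked, and recorded, and the loop stops on success or after a fixed budget.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class LoopResult:
    done: bool
    attempts: int
    history: list = field(default_factory=list)  # (step, outcome, passed) per attempt


def plan_act_verify(
    goal: str,
    plan: Callable[[str, list], str],
    act: Callable[[str], str],
    verify: Callable[[str, str], bool],
    max_attempts: int = 3,
) -> LoopResult:
    """Run plan -> act -> verify until verify passes or the attempt budget runs out."""
    history: list = []
    for attempt in range(1, max_attempts + 1):
        step = plan(goal, history)   # decide the next step, given earlier attempts
        outcome = act(step)          # run the action (tool call, API request, etc.)
        ok = verify(goal, outcome)   # check the work against the goal
        history.append((step, outcome, ok))
        if ok:
            return LoopResult(done=True, attempts=attempt, history=history)
    return LoopResult(done=False, attempts=max_attempts, history=history)


if __name__ == "__main__":
    # Toy example: the "action" only satisfies the check on the second try.
    result = plan_act_verify(
        goal="get to try 2",
        plan=lambda goal, history: f"try {len(history) + 1}",
        act=lambda step: step,
        verify=lambda goal, outcome: outcome == "try 2",
    )
    print(result.done, result.attempts)  # True 2
```

The attempt cap is a deliberate choice: an agent that can retry forever is harder to trust than one that gives up and hands you its history.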

5 weekend signals that changed the mood

UiPath to acquire WorkFusion on Feb 8, 2026

UiPath announced plans to acquire WorkFusion to strengthen its agentic AI portfolio. If you’ve ever wrestled with brittle RPA, you know why this matters. Classic automation is great at repetitive clicks. Agentic automation handles messy edge cases, then checks itself. That combination is where I expect adoption to take off. I read the news in an Insider Monkey report.

Why I care: big companies are done waiting for perfect. They’re stitching agentic reasoning into the systems that already run finance, HR, and ops. If you want an AI or automation role in 2026, fluency in agent plus workflow thinking is a career unlock.

AI.com bought a Super Bowl spotlight on Feb 8, 2026

Ad Age covered AI.com’s Super Bowl ad for a consumer agentic platform. That kind of attention shifts expectations. People will ask software to just handle bills, reschedule appointments, buy the thing, and return it if needed. Work tools will have to meet that bar.

Why I care: consumer-grade experiences force simplicity. The best agentic products this year will feel like texting a reliable doer, not programming a robot. I aim my builds at tasks I’d trust on a hectic Monday.

Google’s PaperBanana landed for researchers on Feb 7, 2026

MarkTechPost highlighted Google AI’s PaperBanana, an agentic framework that automates publication-ready methodology diagrams and statistical plots. It doesn’t just generate a chart: it chooses the right chart for the story, then polishes it to match a style guide. You can read the PaperBanana overview on MarkTechPost.

Why I care: this is where agents stop being toys. Researchers and analysts lose hours to figure plotting and diagram cleanup. If an agent can take intent, iterate options, and meet your style rules, it saves real time.

Ecommerce is quietly re-architecting on Feb 7, 2026

Unified commerce plus agents is set to define ecommerce in 2026. When storefronts, inventory, profiles, support, and marketing speak the same data language, agents can test offers, triage tickets, and route logistics in concert. Entry-level work shifts from pulling CSVs to supervising safe micro-experiments.

Healthcare is moving from chat to action on Feb 8, 2026

Healthcare teams are pushing beyond note summaries to real task routing. Think triaging admin backlogs, prepping prior auths, drafting patient instructions, and logging every decision. Small gains matter here, and the safety patterns built for clinics will lift the whole field.

If you’re starting from zero this week

Pick one outcome that actually matters to you, keep the playground small, and let the agent iterate under supervision. You do not need a PhD. You need a repeatable task, a clear definition of done, and a way to check the work without breaking anything.

The tiny rule that changed how I build

I stopped asking, “Can the model do this?” and started asking, “How can the system guarantee a good outcome?” My loop is always the same: plan, act, verify. Even if the AI stumbles, the verify step catches it. I write that loop down before I build anything.

Try this this week

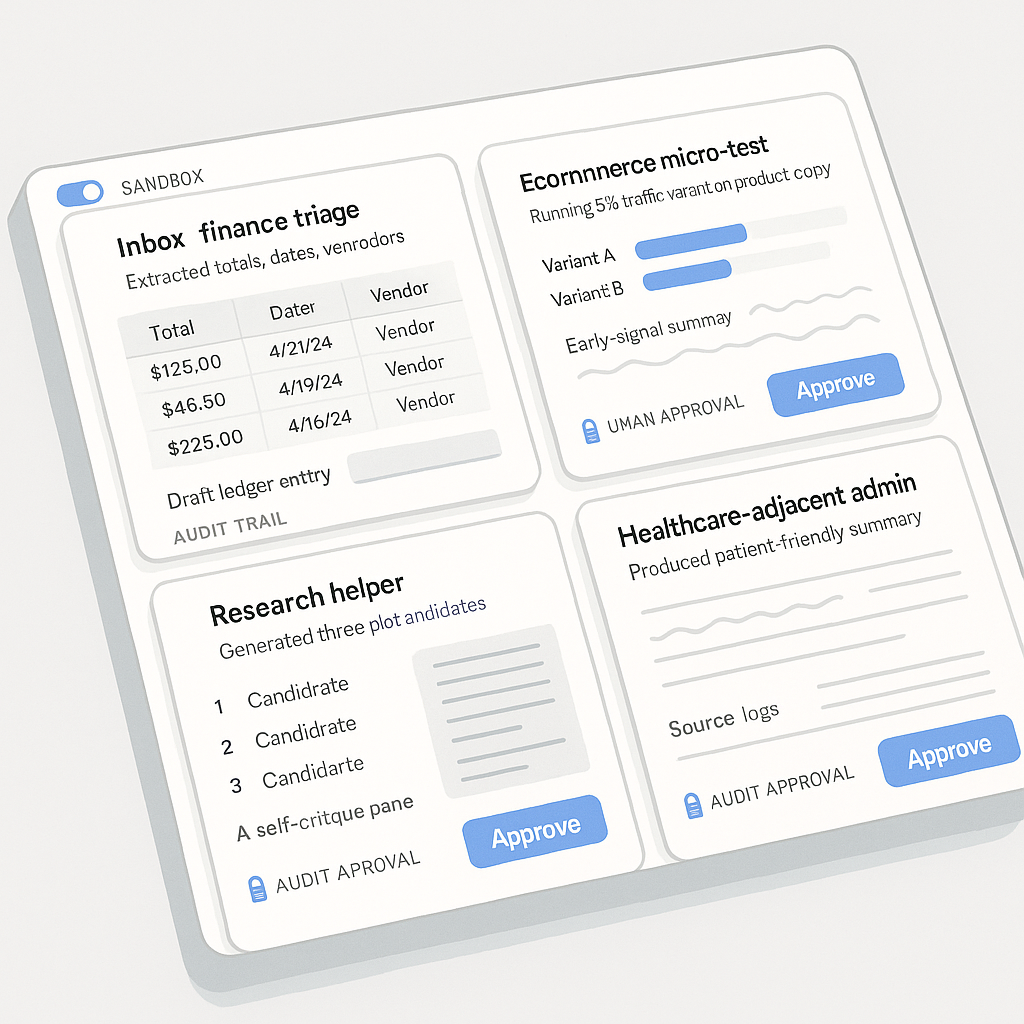

Make it boringly specific and safe. Here are the exact starter tasks I’d pick right now:

- Inbox finance triage: extract totals, due dates, and vendor names from receipts and invoices, then draft a ledger entry for approval.

- Ecommerce micro-tests: propose three product description tweaks, simulate on 5 percent of traffic, then summarize early signals for review.

- Research helper: given a dataset and question, generate three plot candidates plus a one-paragraph takeaway, then self-critique the chart choice.

- Healthcare-adjacent admin: for a public guideline or FAQ, produce a patient-friendly summary and log every source for quick spot checks.
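
For the inbox finance triage task, the verify step can be plain code. Below is a minimal Python sketch, assuming the agent hands back a dict with hypothetical `vendor`, `total`, and `due_date` fields. It never posts anything; it only marks a draft as `pending_approval` or `needs_fix`, so a human still signs off.

```python
from datetime import date
from decimal import Decimal, InvalidOperation


def check_invoice(fields: dict) -> list[str]:
    """Return problems with an extracted invoice; an empty list means ready for human review."""
    problems = []
    if not str(fields.get("vendor") or "").strip():
        problems.append("missing vendor")
    try:
        total = Decimal(str(fields.get("total")))
    except InvalidOperation:
        problems.append("total is not a number")
    else:
        # Reject NaN/Infinity as well as zero or negative amounts.
        if not total.is_finite() or total <= 0:
            problems.append("total must be a positive amount")
    try:
        date.fromisoformat(str(fields.get("due_date")))
    except ValueError:
        problems.append("due_date is not an ISO date (YYYY-MM-DD)")
    return problems


def draft_ledger_entry(fields: dict) -> dict:
    """Build a draft entry for approval; this function never writes to a real ledger."""
    problems = check_invoice(fields)
    return {
        "vendor": fields.get("vendor"),
        "amount": str(fields.get("total")),
        "due_date": fields.get("due_date"),
        "status": "needs_fix" if problems else "pending_approval",
        "problems": problems,
    }


if __name__ == "__main__":
    print(draft_ledger_entry({"vendor": "Acme Supplies", "total": "149.90", "due_date": "2026-03-01"}))
    print(draft_ledger_entry({"vendor": "", "total": "abc", "due_date": "03/01/2026"}))
```

Checks like these run in milliseconds, which keeps the task inside the “verify it in under a minute” budget.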

My simple beginner setup

I keep one place to define the goal and steps, usually a short doc with a checklist the agent must follow. I write the definition of done in a single sentence at the top.

I keep one place to run actions, like API-accessible tools or a browser runner. I start in read-only or sandbox mode to watch behavior without risk.

I keep one place to verify, like small unit tests for data transforms or a human approval step for anything user-facing. If plan, actions, and verification are traceable, I can scale with confidence.
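
Here’s one way that setup could look in code: a minimal Python sketch of a hypothetical `Runner` that keeps the definition of done, the plan steps, every action, and every check in one trace. It assumes you flag which actions write (`writes=True`). In sandbox mode those are logged but not executed, which is the read-only start described above.

```python
import json
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Runner:
    """Hypothetical runner that records plan steps, actions, and checks in one trace."""
    definition_of_done: str
    sandbox: bool = True
    trace: list = field(default_factory=list)

    def log(self, kind: str, **details) -> None:
        self.trace.append({"kind": kind, **details})

    def act(self, name: str, fn: Callable[[], object], writes: bool = False):
        # In sandbox mode, write actions are recorded but never executed.
        if writes and self.sandbox:
            self.log("action", name=name, executed=False, reason="sandbox")
            return None
        result = fn()
        self.log("action", name=name, executed=True, result=repr(result))
        return result

    def verify(self, name: str, passed: bool) -> bool:
        self.log("verify", name=name, passed=passed)
        return passed

    def dump(self) -> str:
        # One JSON object per line: easy to diff, grep, and audit later.
        return "\n".join(json.dumps(entry) for entry in self.trace)


if __name__ == "__main__":
    run = Runner("Draft ledger entries for this week's invoices with all checks passing")
    run.log("plan", step="read the invoices folder")
    rows = run.act("read_invoices", lambda: ["inv-1", "inv-2"])
    run.act("post_to_ledger", lambda: "posted", writes=True)  # skipped: sandbox is on
    run.verify("found_invoices", len(rows) > 0)
    print(run.dump())
```

Flipping `sandbox` to `False` is the only change needed to go live, so the behavior you watched in the sandbox is the same code path that runs for real.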

What I’m watching next

I’m documenting sharper definitions of done. It sounds dull, but precision turns agents from clever to useful. I’m also insisting on human-in-the-loop for anything brand-facing. It might slow me down, but it pays back in trust. Finally, I’m running quick postmortems when an agent fails. I ask where plan, action, or verification broke, then shore up that loop for next time.

FAQ

What is the difference between generative AI and agentic AI?

Generative AI creates content based on prompts. Agentic AI goes further by deciding next steps, taking actions across tools, and verifying outcomes against a goal. In practice, that means fewer handoffs and more done-for-you results you can actually ship.

How do I pick my first agentic workflow?

Choose something you already do weekly, with a clear definition of done, low blast radius, and easy verification. Finance triage, content variants, and research plots are great starters. If you cannot check it in under a minute, it is too big for round one.

How do I keep agentic AI safe at work?

Start in read-only or sandbox modes. Require proposals before changes, simulate on a small slice, and add human approvals for anything external. Log every action and decision so you can audit quickly. Guardrails beat heroics.
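
“Simulate on a small slice” needs a stable way to pick that slice. A common technique is to hash a stable ID together with an experiment name, so the same customer or ticket always lands in the same group. Here’s a minimal Python sketch; the function name and parameters are hypothetical, and 5 percent mirrors the ecommerce micro-test above.

```python
import hashlib


def in_slice(unit_id: str, experiment: str, percent: float = 5.0) -> bool:
    """Deterministically place roughly `percent`% of IDs into the test slice."""
    key = f"{experiment}:{unit_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest[:8], 16) % 10_000  # 0..9999, stable for the same ID and experiment
    return bucket < percent * 100


if __name__ == "__main__":
    ids = [f"user-{i}" for i in range(20_000)]
    share = sum(in_slice(i, "description-test") for i in ids) / len(ids)
    print(f"{share:.3f} of users are in the slice")
```

Including the experiment name in the hash means each experiment gets its own slice instead of hitting the same 5 percent of users every time.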

Do I need new tools to use agentic AI?

Not at first. Most value comes from clearer specs and a verify loop. Use the tools you have, expose what you can via APIs or browser automation, and iterate. As the workload stabilizes, then consider dedicated orchestration or RPA-plus-agent stacks.

Bottom line

Agentic AI just crossed a line. Over Feb 7 and 8, 2026, we saw enterprise momentum, mainstream attention, and sharper tools. If you have been waiting for the right time, this is it. Pick one workflow, keep the scope small, and let plan, act, verify carry the load. I’ll share my own build next week, warts and all.