Agentic AI workflows finally clicked for me this week. Not smarter chats, but small, reliable lanes that plan, call tools, and finish jobs while I keep the keys.

Quick answer

If you want a fast win with agentic AI workflows, start tiny. Use GitHub Agentic Workflows, announced Feb 13, to automate one repeatable dev task; design basic oversight from day one; follow Palo Alto Networks’ Feb 14 governance guidance; and try a single voice micro-agent that completes one real support task end to end. Ship small, measure, then stack a second lane.

GitHub Agentic Workflows landed Feb 13 and changed my dev rhythm

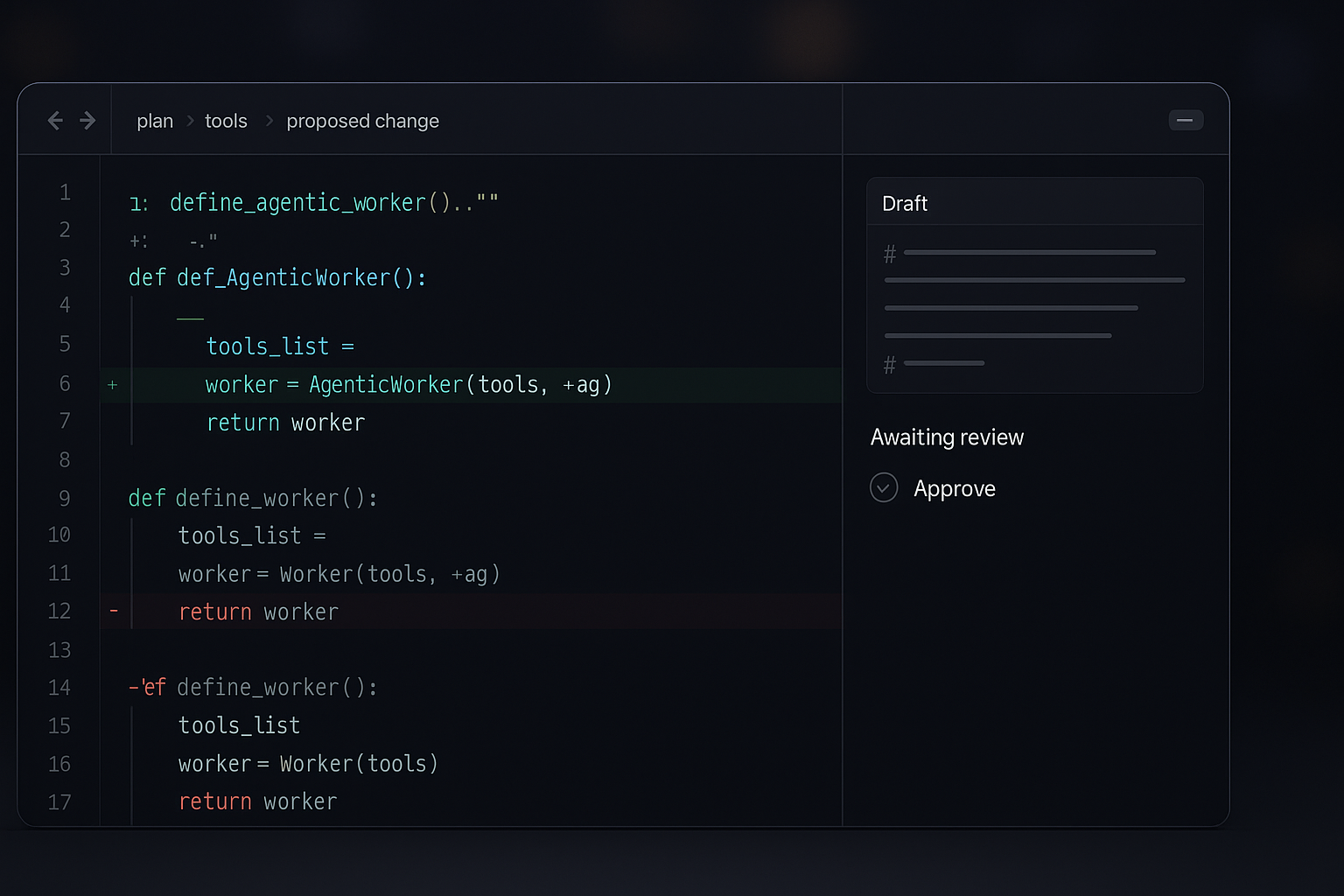

On Feb 13, GitHub put Agentic Workflows in technical preview. The big shift for me is rhythm. Instead of “ask the model, paste the result,” I define a mission and let the agent handle the boring parts while I approve the final step. Think of it as an AI lane beside CI/CD that can plan, call tools, and follow through on a goal. Here’s the GitHub announcement I read.

The simplest way I’d start is with a label like needs-docs. When it appears, the agent scans the diff, drafts an update to the matching README section, and posts the proposed snippet as a draft comment on the PR. I still review and merge, but I don’t spend my morning hunting for wording or links. One trustworthy lane leads to two, then three. That’s when automation becomes your default.
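Here’s a minimal Python sketch of that lane’s control flow. It rests on some loud assumptions: it does not use GitHub Agentic Workflows’ real configuration or API, and `PullRequestEvent`, `DraftComment`, and `propose_docs_comment` are hypothetical names. A simple template stands in for the model-written snippet. What it shows is the shape: the label triggers the lane, the lane drafts, and then it stops for review.

```python
from __future__ import annotations

from dataclasses import dataclass


# Hypothetical stand-in for a pull request event; this is NOT GitHub's payload schema.
@dataclass
class PullRequestEvent:
    number: int
    labels: list[str]
    changed_files: list[str]


@dataclass
class DraftComment:
    pr_number: int
    body: str
    requires_review: bool = True  # the lane never merges; a human always decides


TRIGGER_LABEL = "needs-docs"  # the label from the example above


def propose_docs_comment(event: PullRequestEvent) -> DraftComment | None:
    """Draft a README suggestion for human review, or return None to stay idle."""
    if TRIGGER_LABEL not in event.labels:
        return None  # no trigger label, so the agent does nothing
    # Toy filter (an assumption): only Python source files count as needing docs.
    touched = sorted(f for f in event.changed_files if f.endswith(".py"))
    if not touched:
        return None
    lines = ["Proposed README update (draft, please review):", ""]
    lines += [f"- Document changes in `{path}`" for path in touched]
    return DraftComment(pr_number=event.number, body="\n".join(lines))


event = PullRequestEvent(number=42, labels=["needs-docs"],
                         changed_files=["src/app.py", "README.md"])
print(propose_docs_comment(event).body)
```

The design choice that matters is `requires_review` defaulting to `True`. The lane can suggest, but merging stays a human step.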

The State Department’s Feb 13 signal: agents in high-stakes environments

Also on Feb 13, FedScoop reported that the U.S. State Department is gearing up to roll out agentic AI. That got my attention, because government programs do not flip switches lightly. It tells me these systems aren’t just startup toys. They’re crossing into compliance-heavy environments where audit trails, bounded tools, and clear accountability are non-negotiable. Here’s the FedScoop piece I’d show a skeptical boss.

My practical takeaway for new projects is simple: design for oversight from day one. That means clear goals, tight tool bounds, human approval for irreversible actions, and readable logs. If a federal agency is comfortable rolling agents out, it’s because those basics are nailed down.
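You can write those four basics down before any agent runs. This is a minimal sketch using a hypothetical `MissionSpec` of my own design, not any framework’s schema. It records the goal, the tool allowlist, which tools must wait for a human, and where logs go. It then refuses to call itself ready if any piece is missing. Runtime enforcement would live elsewhere.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class MissionSpec:
    """Hypothetical mission definition covering the four oversight basics."""
    goal: str                                   # one clear, checkable outcome
    allowed_tools: set[str]                     # tight tool bounds (the allowlist)
    irreversible_tools: set[str] = field(default_factory=set)  # must wait for a human
    log_path: str = ""                          # where readable logs go

    def problems(self) -> list[str]:
        """List what is missing before this mission is safe to ship."""
        issues = []
        if not self.goal.strip():
            issues.append("goal is empty")
        if not self.allowed_tools:
            issues.append("no tools are allowlisted")
        unknown = self.irreversible_tools - self.allowed_tools
        if unknown:
            issues.append(f"approval rules name tools outside the allowlist: {sorted(unknown)}")
        if not self.log_path:
            issues.append("no log destination")
        return issues

    def ready_to_ship(self) -> bool:
        return not self.problems()


spec = MissionSpec(
    goal="Keep the README in sync with labeled PRs",
    allowed_tools={"read_diff", "post_comment", "merge_pr"},
    irreversible_tools={"merge_pr"},
    log_path="agent_audit.jsonl",
)
print(spec.ready_to_ship())
```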

Governance that won’t slow you down, thanks to Feb 14 guidance

On Feb 14, Palo Alto Networks published a guide that finally reads like lived experience instead of theory. The gist maps to my own scars: least privilege for agents, make decisions legible, and test agents like code. This governance guide is the one I’d bookmark.
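Testing agents like code can start very small: fixed inputs, expected outcomes, and a run before every update. Here is a Python sketch. `toy_agent` is a hypothetical keyword stub standing in for a model-backed agent, and the cases are made up for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    name: str
    request: str
    expected_tool: str  # the action we expect the agent to choose


def run_evals(agent: Callable[[str], str], cases: list[EvalCase]) -> list[str]:
    """Run every case and return the names of the ones that failed."""
    return [c.name for c in cases if agent(c.request) != c.expected_tool]


def toy_agent(request: str) -> str:
    """Hypothetical keyword stub standing in for a model-backed agent."""
    text = request.lower()
    if "refund" in text:
        return "escalate_to_human"  # money moves need a person
    if "address" in text:
        return "update_address"
    return "answer_faq"


CASES = [
    EvalCase("address change", "Please update my billing address", "update_address"),
    EvalCase("refunds go to a human", "I want a refund", "escalate_to_human"),
    EvalCase("general question", "What are your hours?", "answer_faq"),
]

failed = run_evals(toy_agent, CASES)
print("all evals passed" if not failed else f"failed: {failed}")
```

Swap in the real agent call and keep the cases. A nonempty `failed` list is the signal to hold the update.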

My minimum viable governance kit

- Tool allowlist with a hard fail on anything not approved.

- Human approval before payments, deployments, or PII access.

- Single kill switch to freeze external actions instantly.

- Small eval harness with expected outcomes before each update.

- Readable logs for prompts, tools, inputs, outputs, and final approver.
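Most of that kit can live in one chokepoint that every tool call passes through. This is a minimal sketch that assumes agent tools are plain Python functions. `ToolGate` and its exceptions are hypothetical names, and the `approve` callback stands in for however you actually ask a human. It covers the allowlist, approvals, the kill switch, and a per-call log entry.

```python
from __future__ import annotations

from typing import Any, Callable


class ToolNotAllowed(Exception):
    """Raised for any tool that is not on the allowlist (hard fail)."""


class ApprovalDenied(Exception):
    """Raised when a human declines a sensitive action."""


class AgentFrozen(Exception):
    """Raised for every call once the kill switch is flipped."""


class ToolGate:
    """Hypothetical chokepoint that every agent tool call must pass through."""

    def __init__(self, tools: dict[str, Callable[..., Any]],
                 needs_approval: set[str],
                 approve: Callable[[str, dict[str, Any]], str | None]) -> None:
        self.tools = tools                    # the allowlist: name -> function
        self.needs_approval = needs_approval  # e.g. payments, deploys, PII reads
        self.approve = approve                # returns an approver name, or None to deny
        self.frozen = False                   # state of the kill switch
        self.log: list[dict[str, Any]] = []   # one readable record per executed call

    def kill(self) -> None:
        """Freeze all external actions immediately."""
        self.frozen = True

    def call(self, tool: str, **kwargs: Any) -> Any:
        if self.frozen:
            raise AgentFrozen("kill switch is on")
        if tool not in self.tools:
            raise ToolNotAllowed(tool)        # no fallback, no "close enough" match
        approver = None
        if tool in self.needs_approval:
            approver = self.approve(tool, kwargs)
            if approver is None:
                raise ApprovalDenied(tool)
        result = self.tools[tool](**kwargs)
        self.log.append({"tool": tool, "inputs": kwargs,
                         "output": result, "approver": approver})
        return result


gate = ToolGate(
    tools={"lookup_order": lambda order_id: f"order {order_id}: shipped",
           "refund": lambda amount: f"refunded ${amount}"},
    needs_approval={"refund"},
    # Stand-in for a real human prompt: approve small refunds only.
    approve=lambda tool, args: "alice" if args.get("amount", 0) <= 50 else None,
)
print(gate.call("lookup_order", order_id=7))
print(gate.call("refund", amount=20))
```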

If you add one thing today, make it an audit log. The first time someone asks why an agent did something, you’ll have an answer instead of a guess.
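An audit log doesn’t need infrastructure to start. This sketch assumes a local file in the JSON Lines style, with one JSON object per line. `log_step` and `why` are hypothetical helpers, and the PR number and prompts in the usage lines are illustrative.

```python
from __future__ import annotations

import json
import tempfile
import time
from pathlib import Path
from typing import Any


def log_step(path: Path, *, prompt: str, tool: str, inputs: dict[str, Any],
             output: str, approver: str | None) -> dict[str, Any]:
    """Append one agent step as a single JSON line and return the record."""
    record = {"ts": time.time(), "prompt": prompt, "tool": tool,
              "inputs": inputs, "output": output, "approver": approver}
    with path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record


def why(path: Path, tool: str) -> list[dict[str, Any]]:
    """Answer "why did the agent do that?" by pulling every step that used a tool."""
    with path.open(encoding="utf-8") as f:
        return [r for r in map(json.loads, f) if r["tool"] == tool]


log = Path(tempfile.mkdtemp()) / "agent_audit.jsonl"
log_step(log, prompt="Update README for PR 42", tool="read_diff",
         inputs={"pr": 42}, output="3 files changed", approver=None)
log_step(log, prompt="Update README for PR 42", tool="post_comment",
         inputs={"pr": 42}, output="draft comment posted", approver="me")
print(why(log, "post_comment"))
```

Append-only lines keep each step readable with plain tools like `grep`, and a crash mid-run loses at most the step in progress.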

Voice support is moving from IVR to real agentic workflows

Industry coverage on Feb 13 highlighted how contact centers are shifting from rigid menus to agents that actually do work for callers. I tried a scrappy version with a small client last month. The trick wasn’t a fancy model. It was a tight playbook and a single clear goal: verify a customer and resolve a billing address change unless the risk score flags it. Calls got shorter and humans handled the hairy edge cases.
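A single-goal playbook like that is mostly control flow. This is an illustrative Python sketch, not the client’s system or any contact-center vendor’s API. `Caller`, `Outcome`, `billing_address_flow`, and the 0.7 risk cutoff are hypothetical. Verification and risk scoring are assumed to come from services you already run.

```python
from __future__ import annotations

from dataclasses import dataclass

RISK_THRESHOLD = 0.7  # hypothetical cutoff; tune it against real call data


@dataclass
class Caller:
    account_id: str
    verified: bool      # result of whatever verification step you already use
    risk_score: float   # 0.0 (safe) to 1.0 (risky), from an assumed risk service


@dataclass
class Outcome:
    resolved: bool
    action: str
    handoff_summary: str = ""  # what the human agent sees on escalation


def billing_address_flow(caller: Caller, new_address: str) -> Outcome:
    """One playbook, one goal: verify, then change the address or hand off."""
    if not caller.verified:
        return Outcome(False, "handoff",
                       f"Account {caller.account_id}: verification failed.")
    if caller.risk_score >= RISK_THRESHOLD:
        return Outcome(False, "handoff",
                       f"Account {caller.account_id}: verified, but risk score "
                       f"{caller.risk_score:.2f} blocked address change to {new_address!r}.")
    # A real system would call the billing API here through an allowlisted tool.
    return Outcome(True, "address_updated")


print(billing_address_flow(Caller("A-100", True, 0.12), "1 Main St"))
print(billing_address_flow(Caller("A-200", True, 0.91), "9 Elm Ave"))
```

There is no branch for anything outside the goal. Every path ends in either the one task being done or a human getting a summary.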

If you want a safe first step, build one micro flow that completes exactly one real task end to end. No freestyling. Measure one metric you care about, like average handle time or first contact resolution. If it moves, you’re on track.
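Both metrics are easy to compute from call records. This sketch works under a few assumptions. The `Call` record is hypothetical, and calls are assumed to be listed in time order. First contact resolution is simplified to “share of customers whose first call was resolved”; contact centers often define it with a time window instead.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean


@dataclass
class Call:
    customer_id: str
    seconds: float
    resolved: bool  # was the issue closed on this call?


def average_handle_time(calls: list[Call]) -> float:
    """Mean call duration in seconds."""
    return mean(c.seconds for c in calls)


def first_contact_resolution(calls: list[Call]) -> float:
    """Share of customers whose first call (in list order) was resolved."""
    first_calls: dict[str, Call] = {}
    for call in calls:  # assumes calls are already sorted by time
        first_calls.setdefault(call.customer_id, call)
    return sum(c.resolved for c in first_calls.values()) / len(first_calls)


before = [Call("a", 300, False), Call("a", 200, True), Call("b", 250, True)]
after = [Call("a", 180, True), Call("b", 150, True)]
print(average_handle_time(before), average_handle_time(after))
print(first_contact_resolution(before), first_contact_resolution(after))
```

Run it on a week of calls before and after the micro flow ships. One number moving in the right direction is the whole test.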

What I’m actually doing this weekend

I learn by shipping. I’m wiring a small GitHub agentic lane that updates a docs snippet when I add a label, tagging me for review and stopping there. I’m wrapping it with the governance kit above and logging every step to JSON. I’m also prototyping a voice micro-agent in a sandbox that can verify a user and change a notification preference, with a clean human handoff whenever risk feels off.

How it fits without feeling overwhelming

At first, agentic AI felt like sci-fi. Now it feels like power tools I keep in one box. The Feb 13 GitHub preview is the socket wrench. The State Department signal the same day is the reason to read the manual. The Feb 14 governance guide is the manual with pictures. My mental model is four words: goals, tools, guardrails, logs. If I can answer those, I ship. If I can’t, it’s still a sketch.

FAQ

What are agentic AI workflows in plain English?

They are small, goal-driven automations where an AI plans steps, calls approved tools, and executes toward a clear outcome while you keep approval rights. Think repeatable missions, not open-ended chats.

How do I start with agentic AI workflows in GitHub?

Pick one routine task, like updating docs on a labeled PR. Use the Feb 13 technical preview to watch for a trigger, let the agent update a snippet, and have it open a draft comment for your review. Keep scope tiny and build trust.

What governance do I need on day one?

Least privilege, human approval before anything irreversible, a kill switch, a tiny eval harness, and a readable audit log. These basics make incidents explainable and reduce surprises in production.

Where does voice AI make sense first?

Start with one narrow, high-volume task like account verification or a simple profile change. Give the agent a strict playbook and send edge cases to a human with a clean summary. Measure a single metric to judge success.

Final thought

This week made agentic AI feel like a to-do list, not hype. Ship one tiny GitHub lane, add basic governance, and try a single voice task with a real outcome. You’ll learn more in two days than in a month of reading, and you’ll have a foundation you can actually trust.